Asklemmy

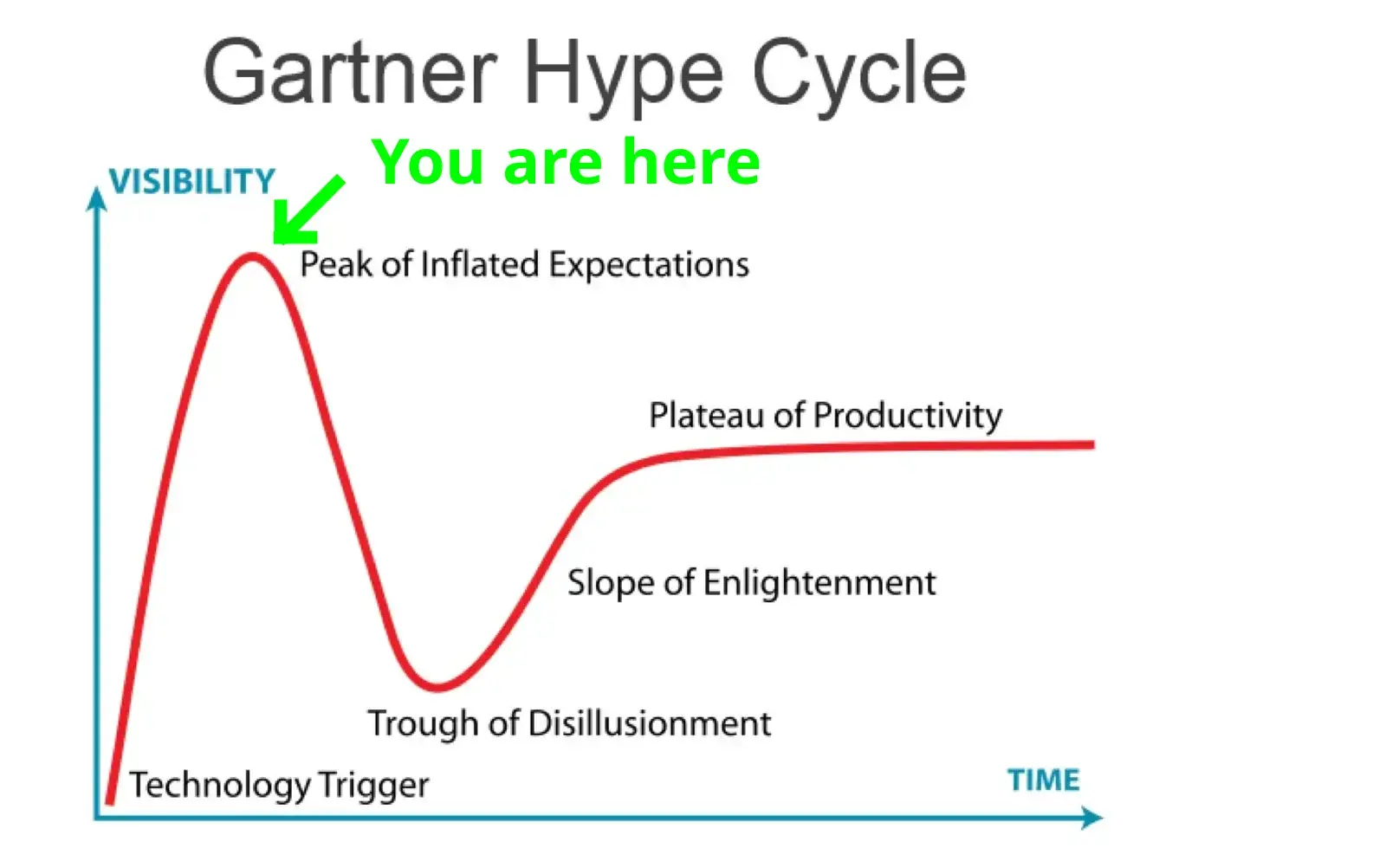

The trough of disillusionment is my favorite.

Kind of nice to see NFTs breaking through the floor at the trough of disillusionment, never to return.

Betteridge's law of headlines: No.

I'm not worried about AI replacing employees

I'm worried about managers and bean counters being convinced that AI can replace employees

It'll be like outsourcing all over again. How many companies outsourced then walked back on it several years later and only hire in the US now? It could be really painful short term if that happens (if you consider several years to a decade short term).

Given the degree to which first-level customer service is required to stick to a script, I could see over half of call centers being replaced by LLMs over the next 10 years. The second level service might still need to be human, but I expect they could be an order of magnitude smaller than the first tier.

Copilot is just so much faster than me at generating code that looks fancy and also manages to maximize the number of warnings and errors.

There's a massive amount of hype right now, much like everything was blockchains for a while.

AI/ML is not able to replace a programmer, especially not a senior engineer. Right now I'd advise you do your job well and hang tight for a couple of years to see how things shake out.

(me = ~50 years old DevOps person)

I'm only in my very first year of DevOps, and already I have five years' worth of AI giving me hilarious, sad and ruinous answers regarding the field.

I needed proper knowledge of Ansible ONCE so far, and it managed to lie about Ansible to me TWICE. AI is many things, but an expert system it is not.

Great advice. I would just add: learn to leverage those tools effectively. They are a great productivity boost. Another side effect once they become popular is that some skills that we already have will be harder to learn, so they might be in higher demand.

Anyway, make sure you put aside enough money to not have to worry about such things 😃

So, I asked ChatGPT to write a quick PowerShell script to find the number of months between two dates. The first answer it gave me took the number of days between them and divided by 30. I told it that it needs to be more accurate than that, so it wrote a while loop to add 1 month to the first date until it was larger than the second date. Not only is that obviously the most inefficient way to do it, but it had no checks to ensure the one in the loop was actually smaller so you could just end up with zero. The results I got from Copilot were not much better.

From my experience, unless there is existing code to do exactly what you want, these AI are not to the level of an experienced dev. Not by a long shot. As they improve, they'll obviously get better, but like with anything you have to keep up and adapt in this industry or you'll get left behind.
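For what it's worth, the months-between-two-dates task described above doesn't need a loop or a divide-by-30. Here is a minimal sketch in Python rather than PowerShell, assuming "months" means whole calendar months and that a month only counts once the start date's day-of-month has been reached (`months_between` is a made-up name for illustration, not a library function):

```python
from datetime import date


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end (negative if end is earlier).

    Assumed convention: a month is complete only when the end date's
    day-of-month has reached the start date's day-of-month.
    """
    # Handle reversed input explicitly instead of silently returning zero.
    if end < start:
        return -months_between(end, start)

    # Direct arithmetic on year and month: no looping, no 30-day guess.
    months = (end.year - start.year) * 12 + (end.month - start.month)

    # The final month is incomplete if the day-of-month has not been reached.
    if end.day < start.day:
        months -= 1
    return months


print(months_between(date(2024, 1, 15), date(2024, 3, 15)))
```

Under this convention Jan 31 to Feb 28 counts as zero months; if you'd rather treat end-of-month to end-of-month as a full month, that rule would need adjusting.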

The thing is that you need several AIs. One to write the question so the one who codes gets the question you want answered. Then a third one who will write checks and follow up on the code written.

When run in a feedback loop like this, the quality you get out will be much higher than just asking ChatGPT to make something

I don't think you are disturbed by AI, but by capitalism doing anything it can to pay you as little as possible. From a pure value* perspective, assuming your niche skills in C++ are useful*, you have nothing to worry about. You should be paid the same regardless. But in our society, if replacing you with someone "good enough" will work for the business, then yes, you should be worried. But AI isn't the thing you should be upset by.

*This is obviously subjective, but the existence of AI with you troubleshooting vs fully replacing you is out of scope here.

This is a real danger in the long term. If advancement of AI and robotics reaches a certain level, it can detach a big portion of the lower and middle classes from society's flow of wealth and disrupt structures that have existed since the early industrial revolution. The educated common man stops being an asset. The whole world becomes a banana republic where only industry and government are needed and there is an impassable gap between common people and the uncaring elite.

Right. I agree that in our current society, AI is a net loss for most of us. There will be a few lucky ones that will almost certainly be paid more than they are now, but that will be at the cost of everyone else, and even they will certainly be paid less than the shareholders and executives. The end result is a much lower quality of life for basically everyone. Remember what the Luddites were actually protesting and you'll see how AI is no different.

I'm in IT and I don't believe this will happen for quite a while if at all. That said I wouldn't let this keep you up at night, it's out of your control and worrying about it does you no favours. If AI really can replace people then we are all in this together and we will figure it out.

AI is a really bad term for what we are all talking about. These sophisticated chatbots are just cool tools that make coding easier and faster, and for me, more enjoyable.

What the calculator is to math, LLMs are to coding, nothing more. Actual sci-fi style AI, like self aware code, would be scary if it was ever demonstrated to even be possible, which it has not.

If you ever have a chance to use these programs to help you speed up writing code, you will see that they absolutely do not live up to the hype attributed to them. People shouting the end is nigh are seemingly exclusively people who don’t understand the technology.

I've never had to double check the results of my calculator by redoing the problem manually, either.

I use AI heavily at work now. But I don't use it to generate code.

I mainly use it instead of googling and skimming articles to get information quickly and allow follow up questions.

I do use it for boring refactoring stuff though.

In its current state it will never replace developers. But it will likely mean you need less developers.

The speed at which our latest juniors can pick up a new language or framework by leaning on LLMs is quite astounding. It's definitely going to be a big shift in the industry.

At the end of the day our job is to automate things so tasks require less staff. We're just getting a taste of our own medicine.

As an example:

Salesforce has been trying to replace developers with "easy to use tools" for a decade now.

They're no closer than when they started. Yes the new, improved flow builder and omni studio look great initially for the simple little preplanned demos they make. But they're very slow, unsafe to use and generally are impossible to debug.

As an example: a common use case is: sales guy wants to create an opportunity with a product. They go on about how omni studio lets an admin create a set of independently loading pages that let them:

• create the opportunity record, associating it with an existing account number.

• add a selection of products to it.

But what if the account number doesn't exist? It fails. It can't create the account for you, nor prompt you to do it in a modal. The opportunity page only works with the opportunity object.

Also, if the user tries to go back, it doesn't allow them to delete products already added to the opportunity.

Once we get actual AIs that can do context and planning, then our field is in danger. But so long as we're going down the glorified chatbot route, that's not in danger.

I think all jobs that are pure mental labor are under threat to a certain extent from AI.

It's not really certain when real AGI is going to start to become real, but it certainly seems possible that it'll be real soon, and if you can pay $20/month to replace a six figure software developer then a lot of people are in trouble yes. Like a lot of other revolutions like this that have happened, not all of it will be "AI replaces engineer"; some of it will be "engineer who can work with the AI and complement it to be productive will replace engineer who can't."

Of course that's cold comfort once it reaches the point that AI can do it all. If it makes you feel any better, real engineering is much more difficult than a lot of other pure-mental-labor jobs. It'll probably be one of the last to fall, after marketing, accounting, law, business strategy, and a ton of other white-collar jobs. The world will change a lot. Again, I'm not saying this will happen real soon. But it certainly could.

I think we're right up against the cold reality that a lot of the systems that currently run the world don't really care if people are taken care of and have what they need in order to live. A lot of people who aren't blessed with education and the right setup in life have been struggling really badly for quite a long time no matter how hard they work. People like you and me who made it well into adulthood just being able to go to work and that be enough to be okay are, relatively speaking, lucky in the modern world.

I would say you're right to be concerned about this stuff. I think starting to agitate for a better, more just world for all concerned is probably the best thing you can do about it. Trying to hold back the tide of change that's coming doesn't seem real doable without that part changing.

I'm both unenthusiastic about A.I. and unafraid of it.

Programming is a lot more than writing code. A programmer needs to set up a reliable deployment pipeline, or write a secure web-facing interface, or make a usable and accessible user interface, or correctly configure logging, or identity and access, or a million other nuanced, pain-in-the-ass tasks. I've heard some programmers occasionally decrypt what the hell the client actually wanted, but I think that's a myth.

The history of automation is somebody finds a shortcut - we all embrace it - we all discover it doesn't really work - someone works their ass off on a real solution - we all pay a premium for it - a bunch of us collaborate on an open shared solution - we all migrate and focus more on one of the 10,000 other remaining pain-in-the-ass challenges.

A.I. will get better, but it isn't going to be a serious viable replacement for any of the real work in programming for a very long time. Once it is, Murphy's law and history teach us that there'll be plenty of problems it still sucks at.

They haven't replaced me with cheaper non-artificial intelligence yet and that's leaps and bounds better than AI.

Yeah, the real danger is probably that it will be harder for junior developers to be considered worth the investment.

I'm a composer. My Facebook is filled with ads like "Never pay for music again!". It's fucking depressing.

If you are afraid of the capabilities of AI you should use it. Take one week to use ChatGPT heavily in your daily tasks. Take one week to use Copilot heavily.

Then you can make an informed judgement instead of being irrationally scared of some vague concept.

Programming is the most automated career in history. Functions / subroutines allow one to just reference the function instead of repeating it. Grace Hopper wrote the first compiler in 1951; compilers, assemblers, and linkers automate creating machine code. Macros, higher level languages, garbage collectors, type checkers, linters, editors, IDEs, debuggers, code generators, build systems, CI systems, test suite runners, deployment and orchestration tools etc... all automate programming and programming-adjacent tasks, and this has been going on for at least 70 years.

Programming today would be very different if we still had to wire up ROM or something like that, and even if the entire world population worked as programmers without any automation, we still wouldn't achieve as much as we do with the current programmer population + automation. So it is fair to say automation is widely used in software engineering, and greatly decreases the market for programmers relative to what it would take to achieve the same thing without automation. Programming is also far easier than if there was no automation.

However, there are more programmers than ever. It is because programming is getting easier, and automation decreases the cost of doing things and makes new things feasible. The world's demand for software functionality constantly grows.

Now, LLMs are driving the next wave of automation to the world's most automated profession. However, progress is still slow - without building massive very energy expensive models, outputs often need a lot of manual human-in-the-loop work; they are great as a typing assist to predict the next few tokens, and sometimes to spit out a common function that you might otherwise have been able to get from a library. They can often answer questions about code, quickly find things, and help you find the name of a function you know exists but can't remember the exact name for. And they can do simple tasks that involve translating from well-specified natural language into code. But in practice, trying to use them for big complicated tasks is currently often slower than just doing it without LLM assistance.

LLMs might improve, but probably not so fast that it is a step change; it will be a continuation of the same trends that have been going for 70+ years. Programming will get easier, there will be more programmers (even if they aren't called that) using tools including LLMs, and software will continue to get more advanced, as demand for more advanced features increases.

You’re certainly not the only software developer worried about this. Many people across many fields are losing sleep thinking that machine learning is coming for their jobs. Realistically automation is going to eliminate the need for a ton of labor in the coming decades and software is included in that.

However, I am quite skeptical that neural nets are going to be reading and writing meaningful code at large scales in the near future. If they did we would have much bigger fish to fry because that’s the type of thing that could very well lead to the singularity.

I think you should spend more time using AI programming tools. That would let you see how primitive they really are in their current state and learn how to leverage them for yourself. It’s reasonable to be concerned that employees will need to use these tools in the near future. That’s because these are new, useful tools and software developers are generally expected to use all tooling that improves their productivity.

> If they did we would have much bigger fish to fry because that’s the type of thing that could very well lead to the singularity.

Bingo

I won't say it won't happen soon. And it seems fairly likely to happen at some point. But at that point, so much of the world will have changed because of the other impacts of having AI, as it was developing to be able to automate thousands of things that are easier than programming, that "will I still have my programming job" may well not be the most pressing issue.

For the short term, the primary concern is programmers who can work much faster with AI replacing those that can't. SOCIAL DARWINISM FIGHT LET'S GO

As someone with deep knowledge of the field, quite frankly, you should know that AI isn't going to replace programmers. Whoever says that is either selling a snake oil product or their expertise as a "futurologist".

Thought about this some more so thought I’d add a second take to more directly address your concerns.

As someone in the film industry, I am no stranger to technological change. Editing in particular has radically changed over the last 10 to 20 years. There are a lot of things I used to do manually that are now automated. Mostly what it’s done is lower the barrier to entry and speed up my job after a bit of pain learning new systems.

We’ve had auto-coloring tools since before I began and colorists are still some of the highest paid folks around. That being said, expectations have also risen. Good and bad on that one.

Point is, a lot of times these things tend to simplify/streamline lower level technical/tedious tasks and enable you to do more interesting things.

So far it is mainly an advanced search engine, someone still needs to know what to ask it, interpret the results and correct them. Then there's the task of fitting it into an existing solution / landscape.

Then there's the 50% of non coding tasks you have to perform once you're no longer a junior. I think it'll be mainly useful for getting developers with less experience productive faster, but require more oversight from experienced devs.

At least for the way things are developing at the moment.

i'm still in uni so i can't really comment on how the job market is reacting or is going to react to generative AI. what i can tell you is it has never been easier to half-ass a degree. any code, report or essay written has almost certainly come from an LLM, and none of it makes sense or it barely works. the only people not using AI are the ones who don't have access to it.

i feel like it was always like this and everyone slacked as much as they could but i just can't believe it, it's shocking. lack of fundamental and basic knowledge has made working with anyone on anything such a pain in the ass. group assignments are dead. almost everyone else's work comes from a chatgpt prompt that didn't describe their part of the assignment correctly, as a result not only is it buggy as hell but when you actually decide to debug it you realize it doesn't even do what it's supposed to do and now you have to spend two full days implementing every single part of the assignment yourself because "we've done our part".

everyone's excuse is "oh well university doesn't teach anything useful why should i bother when i'm learning?" and then you look at their project and it's just another boilerplate react calculator app in which, you guessed it, most of the code is generated by AI. i'm not saying everything in college is useful and you are a sinner for using somebody else's code, indeed be my guest and dodge classes and copy paste stuff when you don't feel like doing it, but at least give a damn about the degree you are putting your time into and don't dump your work on somebody else.

i hope no one carries this kind of sentiment towards their work into the job market. if most members of a team are using AI as their primary tool to generate code, i don't know how anyone can trust anyone else in that team, which means more and longer code reviews and meetings and thus slower production. with this, bootcamps getting more scammy and most companies giving up on junior devs, i really don't think the software industry is heading in a good direction.

Nobody knows if and when programming will be automated in a meaningful way. But once we have the tech to do it, we can automate pretty much all work. So I think this will not be a problem for programmers until it's a problem for everyone.

I use GitHub Copilot from work. I generally use Python. It doesn't take away anything at least for me. Its big thing is tab completion; it saves me from finishing some lines and adding else clauses. Like I'll start writing a docstring and it'll finish it.

Once in a while I can't think of exactly what I want so I write a comment describing it and Copilot tries to figure out what I'm asking for. It's literally a Copilot.

Now if I go and describe a big system or interfacing with existing code, it quickly gets confused and tends to get in the weeds. But man if I need someone to describe a regex, it's awesome.

Anyways I think there are free alternatives out there that probably work as well. At the end of the day, it's up to you. Though I'd say don't knock it till you try it. If you don't like it, stop using it.

I wish your fear were justified! I'll praise anything that can kill work.

Alas, we're not there yet. Current AI is a glorified search engine. The problem it will have is that most code today is unmaintainable garbage. So AI can only do this for now: unmaintainable garbage.

First the software industry needs to properly industrialise itself. Then there will be code to copy and reuse.

I’ll praise anything that can kill work under UBI. Without reform, I worry the rich will get richer, the poor will get even poorer and it leads to guillotines in the square.

Have you seen the shit code it confidently spews out?

I wouldn't be too worried.

Well, I've seen it. I even code reviewed some without knowing. When I asked my colleague what had happened, he said "I used ChatGPT, I'm not sure I understand what this does exactly but it works". I must confess that after the code review comments, not much was left of the original stuff.

I'm gonna sum up my feelings on this with a (probably bad) analogy.

AI taking software developer jobs is the same thinking as microwaves taking chefs' jobs.

They're both just tools to help you achieve the same goal easier/faster. And sometimes the experts will decide to forego the tool and do it by hand for better quality control or high complexity that the tool can't do a good job at.

I’m a 50+ year old IT guy who started out as a C/C++ programmer in the 90’s and I’m not that worried.

The thing is, all this talk about AI isn’t very accurate. There is a huge difference between the LLM stuff that ChatGPT etc. are built on and true AI. These LLMs are only as good as the data fed into them. The adage “garbage in, garbage out” comes to mind. Anybody that blindly relies on them is a fool. Just ask the lawyer that used ChatGPT to write a legal brief. The “AI” made up references to non-existent cases that looked and sounded legitimate, and the lawyer didn’t bother to check for accuracy. He filed the brief and it was the judge that discovered the brief was a work of fiction.

Now I know there’s a huge difference between programming and the law, but there are still a lot of similarities here. An AI generated program is only going to be as good as the samples provided to it, and you’ll probably want a human to review that code to ensure it’s truly doing what you want, at the very least.

I also have a concern that programming LLMs could be targeted by scammers and the like. Train the LLM to harvest sensitive information and obfuscate the code that does it so that it’s difficult for a human to spot the malicious code without a highly detailed analysis of the generated code. That’s another reason to want to know exactly what the LLM is trained on.

Your job is automating electrons, and now some automated electrons are threatening your job.

I have to imagine this is similar to how farmers felt when large-scale machinery became widely available.

If you are truly feeling super anxious, feel free to dm me. Have released gen AI tech though admittedly only in that space for about a year and a half and... Ur good. Happy to get in depth about it but genuinely you are good for so many reasons that I'd be happy to expand upon.

Main point though for programmers will be it's expensive as fuck to get any sort of process going that will produce complex systems of code. And frankly I'm being a bit idealistic there. That's without even considering the amount of time. Love AI, but hype is massively misleading the reality of the tech.

I don't think software developers or engineers alone should be concerned. That's what people see all the time. ChatGPT generating code and thinking it means developers will be out of a job.

It's true, I think that AI tools will be used by developers and engineers. This is going to mean companies will reduce headcounts when they realise they can do more with less. I also think it will make the role less valuable and unique (that was already happening, but it will happen more).

But, I also think once organisations realise that GPTx is more than ChatGPT, and they can create their own models based on their own software/business practices, it will be possible to do the same with other roles. I suspect consultancy businesses specializing in creating AI models will become REALLY popular in the short to medium term.

Long term, it's been known for a while we're going to hit a problem with jobs being replaced by automation, this was the case before AI and AI will only accelerate this trend. It's why ideas like UBI have become popular in the last decade or so.

I'd like to thank you all for all your interesting comments and opinions.

I see a general trend of not being too worried because of how the technology works.

The worrisome part is what capitalism and management might think, but that's just an update of the old joke "A product manager is a guy who thinks 9 women can make a baby in 1 month". And anyway, if not that there will be something else, it's how our society is.

Now, I feel better, and I understand that my first reaction of fearing this technology and rejecting it is perhaps a very bad idea. I really need to start using it a bit in order to get to know this technology. I already found some useful use cases that can help me (getting inspiration while naming things, generating some repetitive unit test cases, using it to help figure out well-known APIs, ...).

As a fellow C++ developer, I get the sense that ours is a community with a lot of specialization that may be a bit more difficult to automate out of existence than web designers or what have you? There's just not as large a sample base to train AIs on. My C++ projects have ranged from scientific modelling to my current task of writing drivers for custom instrumentation we're building at work. If an AI could interface with the OS I wrote from scratch for said instrumentation, I would be rather surprised? Of course, the flip side to job security through obscurity is that you may make yourself unemployable by becoming overly specialized? So there's that.

Your fear is justified insofar as some employers will definitely aim to reduce their workforce by implementing AI workflows.

When you have worked for the same employer all this time, perhaps you don't know, but a lot of employers do not give two shits about code quality. They want cheap and fast labour and having fewer people churning out more is a good thing in their eyes, regardless of (long-term) quality. May sound cynical, but that is my experience.

My prediction is that the income gap will increase dramatically because good pay will be reserved for the truly exceptional few. While the rest will be confronted with yet another tool capitalists will use to increase profits.

Maybe very far down the line there is a blissful utopia where no one has to work anymore. But between then and now, AI would have to get a lot better. Until then it will be mainly used by corporations to justify hiring fewer people.