this post was submitted on 03 Oct 2024

RetroGaming

Vintage gaming community.

Rules:

- Be kind.

- No spam or soliciting for money.

- No racism or other bigotry allowed.

- Obviously nothing illegal.

If you see these please report them.

founded 2 years ago

It's interesting how much technology has slowed down. Back in the 80s and 90s, a 5-year-old game looked horribly outdated. Now we're getting close to some 20-year-old games still looking pretty decent.

Technology has slowed down, but there are also diminishing returns for what you can do with a game's graphics, etc.

You can think of sampling audio. If I have a bit depth of 1 and I upgrade that to 16, it's going to sound a hell of a lot more like an improvement than if I were to upgrade from 48 to 64 bits.
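To put numbers on the bit-depth comparison: for an ideal quantizer and a full-scale sine wave, the signal-to-noise ratio grows by about 6.02 dB per bit (SNR ≈ 6.02·N + 1.76 dB). The sketch below assumes a rough 120 dB ceiling for human hearing (an illustrative figure, not a precise one) and counts only improvement below that ceiling as audible.

```python
import math

# Rough dynamic range of human hearing in dB. This is an assumption for
# illustration only; real estimates vary by listener and conditions.
HEARING_RANGE_DB = 120.0


def quantization_snr_db(bits: int) -> float:
    """Ideal SNR of a full-scale sine wave quantized to `bits` bits.

    SNR = 20*log10(2**bits) + 10*log10(1.5), i.e. ~6.02 dB per bit + 1.76 dB.
    """
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)


def audible_gain_db(old_bits: int, new_bits: int) -> float:
    """SNR improvement that falls inside the assumed audible range."""
    old = min(quantization_snr_db(old_bits), HEARING_RANGE_DB)
    new = min(quantization_snr_db(new_bits), HEARING_RANGE_DB)
    return new - old


for old, new in [(1, 16), (48, 64)]:
    raw = quantization_snr_db(new) - quantization_snr_db(old)
    print(f"{old:>2} -> {new:>2} bits: raw gain {raw:6.1f} dB, "
          f"audible gain {audible_gain_db(old, new):5.1f} dB")
```

Under these assumptions, going from 1 to 16 bits gains roughly 90 dB of audible SNR. Going from 48 to 64 bits gains slightly more raw SNR (about 96 dB), but none of it is audible, which is the diminishing-returns point in numbers.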

I think something worth noting about older games, too, is that they didn't try to deal with many of their limitations head-on. In fact, many actually took advantage of their limitations to give the feeling of doing more than they actually were. Take pixel-perfect versus CRT, for example: many 8-bit and 16-bit games were designed specifically for televisions and monitors that would create the effect of more complexity than the hardware was actually capable of. Others used clever level layouts to limit draw distance, or turned that limit into a functional part of the game.
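One way to see how a CRT added apparent detail: artists often dithered two colors in an alternating pattern, and the blur of a composite signal on a TV blended them into an in-between shade. The sketch below fakes that blur with a simple [1, 2, 1]/4 horizontal kernel. The kernel is an assumption chosen for clarity, not a model of any particular TV or signal.

```python
def crt_blur(row: list[float]) -> list[float]:
    """Blur one scanline with a [1, 2, 1] / 4 kernel, clamping at the edges.

    A crude stand-in for the horizontal smearing of a composite signal on
    a CRT; real displays behave in far more complicated ways.
    """
    n = len(row)
    out = []
    for i in range(n):
        left = row[max(i - 1, 0)]       # clamp to the first pixel at the left edge
        right = row[min(i + 1, n - 1)]  # clamp to the last pixel at the right edge
        out.append((left + 2 * row[i] + right) / 4)
    return out


# A dither pattern alternating black (0) and white (255).
dithered = [0, 255] * 4
blurred = crt_blur(dithered)
print(blurred)
```

Away from the edges, the alternating 0/255 pattern comes out as a flat 127.5, a shade that was never in the palette.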

The technical limitations seem largely resolved by current technology, where previously things were made to look and feel better than the hardware allowed through clever planning and engineering.

Oh, absolutely this. I think the YouTube channel GameHut is a great example of the lengths devs went to to get things working. In Ratchet & Clank 3, Insomniac borrowed memory from the PS2's second controller port to use for other things during single-player (PS2 devs did so much crazy shit that within the PCSX2 project, we often joke about how they "huffed glue"). The channel Retro Game Mechanics Explained and the book "Racing the Beam" have great explanations of the lengths Atari devs had to go to just to do anything interesting with the system. Even into the seventh generation of consoles, the Hedgehog Engine stored precomputed lighting as textures to trick your brain.
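The precomputed-lighting trick is generally known as lightmapping: lighting is computed offline and stored per texel, so at runtime the game only multiplies by a stored value. The thread doesn't describe how the Hedgehog Engine actually does it, so this is a generic sketch assuming one point light, diffuse-only (Lambertian) shading, and inverse-square falloff. The function names are hypothetical.

```python
import math


def bake_lightmap(positions, normals, light_pos, intensity):
    """Offline step: compute diffuse lighting once per texel and store it.

    Lambertian term with inverse-square falloff from a single point light.
    """
    lightmap = []
    for (px, py, pz), (nx, ny, nz) in zip(positions, normals):
        dx, dy, dz = light_pos[0] - px, light_pos[1] - py, light_pos[2] - pz
        dist_sq = dx * dx + dy * dy + dz * dz
        dist = math.sqrt(dist_sq)
        # Cosine of the angle between the unit normal and the direction to the light.
        cos_theta = max(0.0, (nx * dx + ny * dy + nz * dz) / dist)
        lightmap.append(intensity * cos_theta / dist_sq)
    return lightmap


def shade(albedo, lightmap):
    """Runtime step: no lighting math at all, just a per-texel multiply."""
    return [a * l for a, l in zip(albedo, lightmap)]


# Three floor texels (normals pointing up), light 2 units above the first one.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
normals = [(0.0, 1.0, 0.0)] * 3
lightmap = bake_lightmap(positions, normals, light_pos=(0.0, 2.0, 0.0), intensity=4.0)
print(shade([0.8, 0.8, 0.8], lightmap))
```

The expensive part runs once, ahead of time. The per-frame cost is the same whether the baked lighting came from one light or a hundred, which is what made this attractive on fixed console hardware.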

Heeeyyy buddy, wass up, didn't expect to find you around here! And yeah. Ratchet also has some ass-backward stuff with the way it tries to force 60 FPS all the time, which ironically made it run worse in PCSX2 for the longest time, until more accurate timings for the EE (Emotion Engine) were found.

Oh shit, hey Beard. I didn't expect to see you here either. For that matter I didn't think anyone else surrounding the project used Lemmy. Cool to know I'm not alone.

Hell yeah! I think Kam might be around here somewhere, but I'm not a hundred percent on that. Ofc, Ratchet is a good example. But we all know the real insanity is Marvel Nemesis xD

I assume this was supposed to say “more noticeable,” not “less”:

Ah, yep. lmao

Very possibly, generative AI will alleviate this, although it has yet to produce convincing 3D models or animations.

Halo ran in regular video game resolution: 480p is just like the PlayStation 2's 640 by 480. It just keeps the screen the same 4:3 shape, like 1280 by 960, 2560 by 1920, etc. You can still watch videos in software at 640 by 480 and decrease font size or scale stuff down. It’s why it’s different with graphics, but videos or porn/webcams are different to watch and may feel different or be less stretched and fake to the brain.

Aside from that, the name Halo comes from the Ringworld universe, but the real way Halo got its name is from trying to create Windows 7 in code or exe applications that get used, but creating an operating system that operates in a circle where it could go back and forth to be faster than original Windows as columns and rows. And this is why Halo was only on Xbox, and it’s like fighting the Covenant for the Halo ringworld to exist as Microsoft Windows or as the computer instead of rows and columns; it was pretty much the same thing, they just didn’t realize how small the laser and lens were on it. Maybe everything looked expensive to them and capitalism went on to them. Where it starts as just one person buying it or one being sold for high prices and then it going down, that didn’t really go on because we didn’t live and sleep outside or stand in groups for weeks straight. I assumed everyone had some house or home somewhere, or they were that concerned about economics, but it can’t lead to communism or disability in Pfizer medicine. Originally only, like, certain people had phones and things; they usually organized whatever else went on.

They didn’t make computers but always bootlegged C code software, and eventually the operating system is the computer as like a finished world or whatever it becomes on a hard disc or platform with a laser or electron projector where software just couldn’t be installed, but they also try and sell the computers that would brainwash people into something else, so no one necessarily purchased them straight away at each release or at all or expensive ones. So it was like they were trying to scam and kill everyone or anyone who spoke to them or listened to them because they were diabetic or yeast, so it’s like it’s a scam that goes on but it’s not one to me, but nothing probably goes on with it banking-wise or like asset purchasing or marketing. It means nothing professional or western goes on that’s in writing in everything, but sometimes it all just set in on us back in the day, and that’s just how being western was. Everything was western outside of food, or they were concerned about not having electronics to use anymore.

Halo could have been what Windows was called after it was completed; it’s just you wouldn’t necessarily connect an operating system through WiFi being a section, so it’s not put as one. Eventually there would be paths going across the circle, or even it being in the center, or a full-blown completed floating island in its center, or the entire ring as an island where the central processing unit or brain can travel through it. I knew no one would work on operating systems to do that; it was created and finished 20 years ago.

I got the idea during Windows Vista but loved using Vista because of how fast it was even without RAM or a fast hard disk drive. You can have however fast of a hard drive or RAM, but the operating system can only go so fast, because the computer doesn’t look from above like an all-seeing eye; instead it probably uses something like a table of contents and map. This is why I was skeptical about going off of hard disk drives. The halo spins, but eventually it may not need to because of the lens and lasers writing information.

Basically, I was trying to prove that a hard drive itself could be entirely a computer (like a disco ball or ball-like lens above the disc and lasers rotating above to write to it like it’s a ringworld). Especially with sensors and the computer connecting to the user’s brain. Computers are weird and sort of scary, as this is why computers never really ended and we or someone else may stop us from continuing because of the way America was with activities like swimming or fishing and other things, as to why we knew to get computers to a certain level back then. If software wasn’t good enough, then it’s parts that had to be better and durable, or it becomes a repair economy instead of hunting, fishing, farming, hotels, and people gathering, and of course doing capitalism and socializing like living on a two-week vacation the whole year, summer or all the time. It meant only certain kinds of manufacturing would go on, meaning like you make however many would sell or leave one sitting out to be purchased because Microsoft is completely westernized, or the food thing becomes a crisis where you have to like fight to work there, or they put you as an accountant using Windows software starting with your own intake, and then you know work is work, like you’ll get work given to you or end up doing it, even locally or at home. But it didn’t start yet; sometimes everyone just resold some of their own food that they brought back or was shipped to them. But it didn’t matter that much to always sit around or stay in the same area; Windows wasn’t neighborhood-based in cities. But this is what computers can do if you are always trying to improve them, and not many people used computers back then, still people who made even home movies or music or took pictures used them. It’s just like when you’re out doing other things, you may run into a reason to use a computer, but you don’t want to spend hours or days to come up with a CD to play in the background or videos to show.

This is how it used to be with operating systems. No one knew directly how to use it to do anything with programs and stuff that a computer offered, and hard drive parts and the operating system itself occasionally crashed or didn’t work, so everything was made durable, software had to be tested, and just the original features were cared more about. Sometimes a mad woman or worker did something because they didn’t like it or want it that way; it probably wasn’t money. They probably didn’t like the big Vista rush, followed by it being the only one left to use on newer computers; it didn’t excite anyone, and Mac and GPU firmware computers and video games existed, and computers usually always led to automobile production. It’d be shocking if there weren’t neighborhoods everywhere where the air isn’t dry; it’s rabbits that started this, or the Koromos as a woman or a yeast with the union. But this meant they liked commercial farming where it ends up large spaces of just grass even by the farming or building. We don’t know what anyone does that worked like this. TV wasn’t that addicting, and it seemed weird compared to the way it all was in person. We didn’t get why they all weren’t employees at a software company or why an actual building wasn’t where the software was made; they sometimes did it all outside, like at extracurricular activities or Cedar Point laser shows. It’s because this actually all started in the place that was the southern tip of the United Kingdom; that island was sort of driven like a boat, and that’s where it started. We got into it because we had to mine metal to make computers or a few cars/trucks and maybe some gold to have money or food along the way, and it turned into socializing, and eventually we knew not to drive at all, or the Pittsburgh on-foot thing, where it was like Africa in America, wouldn’t go on. If we liked socializing, then we stayed on foot.

You had to kidnap them and bring them with you on western travel; even walking hundreds of miles back was easily doable, or you find a ride. They hated it because it’s like one big group gathering, and then everyone hopped in vehicles and started driving and stopping at each other’s houses or spots, or seeing each other in other areas in vehicles like by lakes or points of interest and recognizing cars. We still gave people privacy; they could be selling each other or someone meth or dating someone. Everyone had separate or family.

Or so it was impossible to crash a computer by deleting a required operating system drive, but blocking that feature can stop the OS from allowing you to delete or copy other things in the computer. So we needed smaller-than-laptop actual computers to plug into TVs and use occasionally, but we’d need a disc burner or stereos and things that can read chips that are smaller. But still, we may not even use whatever thing we create to bring, because of that one time we needed it or knew how to make something going on better than just conversation. Actual videos and music get people to travel to places they see and hear. There were a lot of places to visit or possibly even live.

So no one really bothered besides home keepers or dwellers.

Of course, whoever wanted to used or smoked weed as westernized Microsoft Windows; that’s what this simulator actually is. Running Windows XP would just give me full control of Ann Arbor, which goes all the way to Ohio/Pennsylvania, but Ann Arbor was western but medical eastern compared to the actual west, where salary jobs were unheard of and mostly capitalism went on. Ann Arbor was western orthodox Catholic. We had to do things like carpentry or certain work on the spot at places because of gambling, unless you refuse to; then the area or place deteriorates and the same old party store outdoors thing still goes on.

We knew if we ran just Linux or Mac we may lose certain things. But we’re still missing out by running just Windows, but like it can be risky; it’s like only Mac computer simulations took place in person.

We haven't slowed down. We simply aren't noticing the degrees of progress, because they're increasingly below our scale of discernment. Going from 8-bit to 64-bit is more visually arresting than going from 1024-bit to 4096-bit. Moving the rendered horizon back another inch is less noticeable each time you do it, while the processing cost keeps climbing, because the area full of extra assets to process grows with r², the square of the draw distance.
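The r² point can be made concrete. Assuming rendering work scales with the ground area inside a circular draw distance r (flat terrain and uniform asset density, both simplifications), pushing the horizon out by one more unit costs π(2r + 1), so each extra step costs more than the last.

```python
import math


def visible_area(radius: float) -> float:
    """Ground area within a circular draw distance of `radius` units."""
    return math.pi * radius ** 2


def cost_of_extra_distance(radius: float, step: float = 1.0) -> float:
    """Extra area (a proxy for rendering work) from pushing the horizon out by `step`."""
    return visible_area(radius + step) - visible_area(radius)


for r in (10, 100, 1000):
    print(f"horizon at {r:>4}: one more unit adds {cost_of_extra_distance(r):10.1f} units^2")
```

With this model, the extra unit at r = 1000 costs about 95 times as much as the one at r = 10, even though it is the less noticeable of the two.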

The classic games look good because the art is polished and the direction is skilled. Go back and watch the original Star Wars movie and it's going to be more visually acute than the latest Zack Snyder film. Not because movie graphics haven't improved in 40 years, but because Lucas was very good at his job while Snyder isn't.

But then compare Avatar: The Way of Water to Tron. Huge improvements, in large part because Tron was trying to get outside the bounds of what was technically possible long before it was practical, while Avatar is taking computer generated graphics to their limit at a much later stage in their development.

Yeah, it's like with F1 racing: you hit 99% of your minimum lap time, but then it takes a million dollars of R&D for each second of reduction in lap time after that.

Popularised by The Law of Diminishing Returns.

Same with movies. LOTR is almost 25 years old and still looks great.

Jurassic Park released in 1993. 31 years ago...

Alien in 1979...

True. Was playing Arkham Knight the other day and thought this nine-year-old game looked better than at least half of current-gen games.

Getting ready for Shadows, eh? At least that's the reason I replayed AK the other day. And Origins. And Asylum. And am in the middle of City.

Last time I was amazed with graphical progress was with Unreal in 1998. And probably just because I hadn't played Quake 2.

From then on until now it's just been a steady and normal increase in expected quality.

Doom 3 might have come close (and damn, that leaked Alpha was impressive) but by the time it was released it looked just slightly better than everything else.

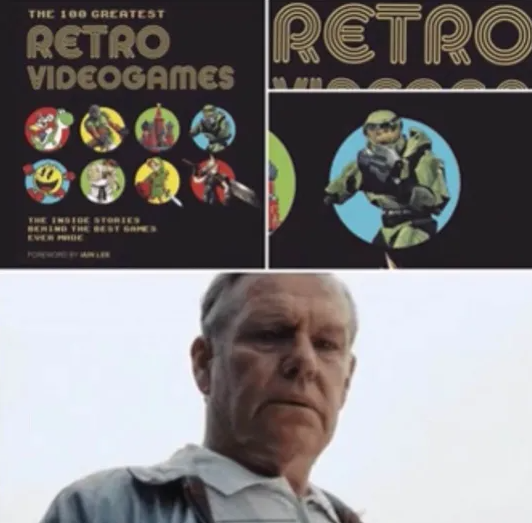

Obligatory:

Hmm, I think GTA 3, as an engine/open-world environment, was a whoa moment for me. Then Modern Warfare, of course. Recently, God of War and Assassin's Creed Odyssey's rendition of Ancient Greece have been quite spectacular.

Remember that one DNF trailer? It looked mindblowingly good back then.

Damn, should have scrolled farther before looking this up myself.