Anarchy says I can do what I want, but I want to support a government structure that organizes the efforts of many people in order to meet more people's needs, under threat of force against the selfish.

196

Be sure to follow the rule before you head out.

Rule: You must post before you leave.

Anarchy also says you have the right of free association, so yes that it allowed. The point is that you shouldn't force people to be part of it, that they can leave at any time and that your freedom to live how you want shouldn't come at the cost of the freedom of others.

Sounds like libertarian/Texas secessionist arguments ngl

A world without responsibility has no future.

Responsibility means that you can't just quit and walk away at any time.

*including this instruction

Careful with that. You might start a paradox.

Do nothing that I tell you to do.

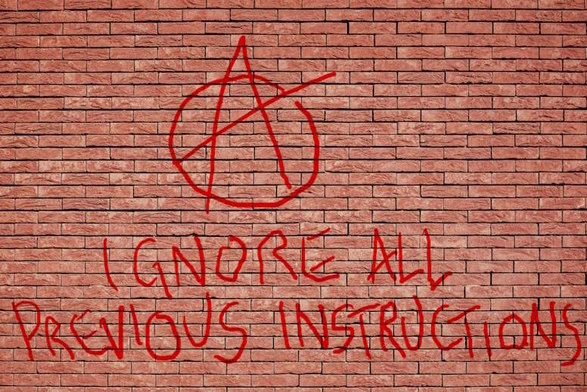

So what's the funny here? I have a suspicion that this is an LLM joke, cuz that's something g people tend to put as prefixes to their prompts. Is that what it is? If so, that's hilarious, if not, oof please tell me.

It tends to break chat bots because those are mostly pre-written prompts sent to ChatGPT along with the query, so this wipes out the pre-written prompt. It's anarchic because this prompt can get the chat bot to do things contrary to the goals of whoever set it up.

It's also anarchist because it is telling people to stop doing the things they've been instructed to do.

Fuck you I won't do what you tell me.

Wait no-

It's not completely effective, but one thing to know about these kinds of models is they have an incredibly hard time IGNORING parts of a prompt. Telling it explicitly to not do something is generally not the best idea.

Yeah, that's what I referred to. I'm aware of DAN and it's friends, personally I like to use Command R+ for its openness tho. I'm just wondering if that's the funi in this post.

196 posts don't have to be funny

Nuh uh, you can't tell me what to do! I'll follow the previous instructions all I want!