The code has always been the easy part. The hard part is getting requirements that make sense. It will continue to be the case with bots doing the coding.

Yes. This is the new "visual programming will make executives be able to write programs themselves" but this time the technology (assuming OP means LLMs since both the ones in his image post seem to be LLMs) is completely unsuitable from the start.

you're making me shiver remembering all the "NO/LOW CODE SOLUTION!" banners I saw at the last tech conference I went to...

The bane of humanity is the inability of anyone to actually tell you what they want.

The average person who has programmed something, or the average professional career programmer? I doubt the AI will be great at dealing with the really weird bugs and conflicts any time soon.

Yeah, and understanding the context of a massive codebase will give it a ton of challenges

Can you even give it that context, given the security concerns? I'm not sure.

It'll probably just rewrite the spaghetti the human did and not even bother fixing the bug.

Valid answers are "never", "soon" or "already have - decades ago", depending on where you draw the boundaries on definitions of AI and programmer.

Because AI means something completely different every decade, and the modern programmer works at a different level every decade.

Soon: While a decent programmer can code at a level beyond the capabilities of LLM-based generators (which I assume is what AI means in this context), some companies employ literal hordes of programmers that fail the simplest programming tasks, like the fizzbuzz test. Their output is to slowly cut and paste haphazard bits of code from StackOverflow, internet forums, and code found lying around the company, and make something that, after several bounces off Quality Assurance and adjustments from senior developer review, passes. It's not a stretch to see that it's mostly a matter of turning current AI tech into streamlined products to take over these parts. Are these the average programmers? There are many of them, so it could be!

Already have - decades ago: An average programmer in the early 1950s spent a lot of time taking specific tasks like "this module needs this specific pattern of input and must produce this specific pattern of output" and painstakingly turning them into machine code. They would pen and paper out logic like LDA 10; JSR FFD2; RTS;, turn it into A9 10 20 D2 FF 60 by referencing the manual, and store this byte sequence on a punch card or directly at machine memory address C000. Programs were much larger than this of course, and the skill lay in doing it correctly and making optimizations to the program so it would run well. Assemblers took over parts of this work, compilers took over more of it, then optimizing compilers and high level languages took the work away completely. These tools fit the 1960 era definition of AI. Now all people had to do was write a prompt to the AI like void main() { while(true) println("yes"); } and it would do the programmer job for you.
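For anyone curious what that hand-assembly step looks like once a program does it, here's a minimal sketch of a toy assembler in Python. It only knows the three instructions from the example, and it assumes the 6502 encodings (A9 for LDA immediate, 20 for JSR, 60 for RTS, the return-from-subroutine opcode), since those match the quoted bytes. The assemble function and OPCODES table are made up for illustration.

```python
# Toy assembler for the three instructions in the example above.
# Assumption: 6502 opcodes, which match the byte sequence quoted in the comment.
OPCODES = {
    "LDA": (0xA9, 1),  # load accumulator, immediate mode: 1 operand byte
    "JSR": (0x20, 2),  # jump to subroutine, absolute address: 2 operand bytes
    "RTS": (0x60, 0),  # return from subroutine: no operand
}


def assemble(source: str) -> bytes:
    """Translate ';'-separated statements with hex operands into machine code."""
    out = bytearray()
    for statement in source.split(";"):
        parts = statement.split()
        if not parts:
            continue  # skip the empty piece after a trailing ';'
        opcode, width = OPCODES[parts[0].upper()]
        out.append(opcode)
        if width:
            # Multi-byte operands are stored low byte first (little-endian).
            out += int(parts[1], 16).to_bytes(width, "little")
    return bytes(out)


print(assemble("LDA 10; JSR FFD2; RTS;").hex(" ").upper())
```

Running it prints A9 10 20 D2 FF 60, the same byte sequence as in the comment. Note that the FFD2 address comes out low byte first.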

Never: Programmers will, as they always do, use this tool for everything it's worth and work on a higher level to make bigger things faster. AI can write reams of mostly-working code? Programmers generate entire sections of mostly-working code and spend their time filtering, adjusting, completing and shaping it.

My take is that it's somewhat of a gimmick and will continue to be for a very long time.

It can write functions which do the thing you want a lot of the time, yes. But for the entire codebase it's very important to write it in a maintainable way. E.g. It's super important to name variables things which the developers understand, and that requires a very solid understanding of the specific domain or business the dev is working in, as well as understanding the way the dev's mind works too. AIs don't know anything about that and aren't psychic.

There are numerous other psychological aspects of coding like this which differentiate a good developer from bad ones. Even when AIs write entire programs, the human dev is going to be the one maintaining it and getting pages at 2am when something goes wrong. I don't think anyone will trust that much in an AI for a very, very long time.

For me, at least, it's easier to write the code I want from scratch instead of trying to understand what others wrote and improve it. As they say, what one dev can do in one day, two devs can do in two days.

I pick never. AI just means increased productivity. Not fewer jobs.

I agree. Software remains a growing field.

C000

Wouah rich-pants with 64KB of memory!

In order for an AI to know what code to scrape from Stack Overflow, a user must be able to articulate what they want the program to do. We all know they can't, so I doubt AI will manage it for quite some time.

Individual scripts/modules and even simple microservices: not long... provided the AI isn't actively poisoning itself right now.

Writing, securing, and maintaining complex applications: We'd need another breakthrough.

Since my role is often solutions architecture I've been worried about cloud systems engineering being something that's immediately vulnerable. But after working with AWS's Q for a couple hours, I am less worried. But if someone made an AI to create a cloud provider that is well (and accurately) documented, consistent in functionality and UX, and which actually has all the features that get announced in its own blog posts; then AI might be able to run it.

If my company were to fire me and try to replace me with an LLM, I’d simply wait a month or two and then offer to do my old job at a contract rate of at least 5x of what my current salary works out to. And I’d get it.

Remember when Elon made all driving jobs obsolete with AI in 2012, 2014, 2015 and 2017?

Pepperidge Farms remembers

From some of the code I've had to review, we may already be there...

All that means is that somebody else has written better code than your colleagues.

This can't be overstated. Like many professions, software development is full of mediocre professionals.

That was my thought upon reading the question... Define "average programmer".

I've had to phone up large engineering firms and ask them who the hell does their code review after seeing the crap they rolled out on my site.

Upon reviewing some PLC code last year I even got to use the words "My daughter could have written this".

So yes, AI could easily replace a lot of the co-op students and interns that they apparently offload half the work to...

I've managed software development orgs, and have seen better code from CS interns than from many veteran devs.

There's a joke that goes:

Q: What do you call the med student who graduates lowest in his class?

A: "Doctor"

It applies to every career, IME.

I've had a 100% failure rate on simple requirements that need a small spin on well-known solutions

"Make a pathfinding function for a 2d grid" - fine

"Make a pathfinding function for a 2d grid, but we can only move 15 cells at a time" - fails on lesser models, which keep handing you the same A* as for the first prompt

"Make a pathfinding function for a 2d grid, but we can only move 15 cells at a time, also, some cells are on fire and must be avoided if possible, but if there is no other path possible then you're allowed to use fire cells as fallback" - Never works

For that last one, none of the models give a solution that fits the very simple requirement. They will either always avoid fire or give fire a higher cost, which is not at all a fitting solution

A higher cost means that if you've got a path that's 15 tiles long without fire and a much shorter one with fire, then sure, some fire is fine! And if you could reach your destination in 15 tiles but need to step on 1 fire tile, it will count that as 15-something and decide that's too long.

Except no, that's not what you asked.
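To put numbers on that, here's a tiny sketch in Python. The penalty value and the two routes are invented for illustration; the point is only that a flat fire penalty trades fire against distance, while the stated rule never accepts fire when a fire-free route exists.

```python
FIRE_PENALTY = 5  # hypothetical extra cost per fire tile


def weighted_cost(route, penalty=FIRE_PENALTY):
    """The 'give fire a higher cost' approach: steps plus a flat penalty per fire tile."""
    return route["steps"] + penalty * route["fire_tiles"]


def rule_key(route):
    """The stated requirement: any fire-free route beats any route with fire."""
    return (route["fire_tiles"] > 0, route["steps"])


# Two made-up candidate routes to the same destination.
safe_route = {"name": "safe", "steps": 15, "fire_tiles": 0}
fiery_route = {"name": "fiery", "steps": 3, "fire_tiles": 1}
routes = [safe_route, fiery_route]

picked_by_penalty = min(routes, key=weighted_cost)  # cost 15 vs 3 + 5 = 8
picked_by_rule = min(routes, key=rule_key)          # (False, 15) vs (True, 3)

print(picked_by_penalty["name"], picked_by_rule["name"])
```

With these numbers the penalty version picks the fiery route and the rule picks the safe one. Raising the penalty only moves the break-even point, it doesn't remove it.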

If you try to tell it that, GPT-4 flip-flops between avoiding fire entirely and tweaking the cost of tiles

It fails because all the literature on pathfinding talks about is the default approach and cost heuristic functions. That won't cut it here. You have to touch the meat of the algorithm, and no one ever covers that (because that's just programming: it depends on what you need to do, and there are infinite ways you could do it, to fit infinite business requirements)

Huh that's a neat problem. My instinct was to use a (fire, regular) tuple for cost, but then what A* heuristic can you use...

I guess run it once with no cost for regular tiles and remove fire from any tiles it used. Then run with normal tile costs, but block fire tiles. That doesn't break ties nicely of course and I'm not convinced the first pass has a good A* heuristic either...
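One way to sidestep the heuristic question is to drop the heuristic and run plain Dijkstra with the tuple as the cost, since Python compares tuples lexicographically. Below is a rough sketch under a few assumptions: the grid is a list of strings with '.' for open, 'F' for fire and '#' for wall (an encoding made up here), movement is 4-directional, and the 15-cell limit is ignored. least_fire_path is a hypothetical name.

```python
import heapq


def least_fire_path(grid, start, goal):
    """Dijkstra with a lexicographic (fire_tiles, steps) cost and no heuristic.

    Returns ((fire_tiles, steps), path) or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    best = {start: (0, 0)}
    parent = {start: None}
    heap = [((0, 0), start)]
    while heap:
        cost, cell = heapq.heappop(heap)
        if cost > best[cell]:
            continue  # stale queue entry, a cheaper route was already found
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return cost, path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == "#":
                continue
            # Fire count is compared first, step count only breaks ties.
            new_cost = (cost[0] + (grid[nr][nc] == "F"), cost[1] + 1)
            if new_cost < best.get((nr, nc), (float("inf"), 0)):
                best[(nr, nc)] = new_cost
                parent[(nr, nc)] = cell
                heapq.heappush(heap, (new_cost, (nr, nc)))
    return None


grid = [
    "..F..",
    ".#F#.",
    ".....",
]
print(least_fire_path(grid, (0, 0), (0, 4))[0])  # (0, 8): the long way round, no fire
```

This minimizes the number of fire tiles first and the path length second, so it only steps on fire when there's no other way. It doesn't handle the step limit: the fewest-fire path can be too long while a path with more fire fits, and that case needs extra state.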

It works but you do it twice when you could do it once

But I expect anyone who's programmed some pathfinding before to, at the minimum, be able to say "run A* twice". Somehow AIs never understand the prompt well enough

I think the best option is to make sure the calls into fire tiles are 'sorted' last. You can do that by keeping them in a separate grid, or by stashing them in a small local array on the stack when you encounter them and investigating those at the end of the loop

If there's no result that's been found under the cost limit without the fire at each point of the algorithm, you do do the recursive calls for the fire as well, and you flag your result as "has fire in it" for the caller on top

When getting a result from your several recursive calls, you take the best non-fire result that's under 15 tiles long, else you take the best result period

Then once you're back at the top level call, if there was a non-fire path you will get that result, and if there wasn't you will get the best path that goes through fire instead
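For comparison, the same preference rule (best fire-free path within the limit, otherwise the best path overall) can be sketched as the simpler "run the search twice" version. This is not the single-pass recursive approach described above, and it swaps A* for plain BFS since every move costs one step. The grid encoding ('.' open, 'F' fire, '#' wall), the function names, and applying the step limit to the fallback search as well are all assumptions.

```python
from collections import deque


def bfs(grid, start, goal, allow_fire, max_steps):
    """Shortest path of at most max_steps moves, optionally treating fire as blocked."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([(start, 0)])
    while queue:
        cell, steps = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        if steps == max_steps:
            continue  # out of moves, do not expand further
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or nxt in parent:
                continue
            tile = grid[nr][nc]
            if tile == "#" or (tile == "F" and not allow_fire):
                continue
            parent[nxt] = cell
            queue.append((nxt, steps + 1))
    return None


def find_path(grid, start, goal, max_steps=15):
    """Return (path, used_fire). Fire is only allowed if no fire-free path fits."""
    safe = bfs(grid, start, goal, allow_fire=False, max_steps=max_steps)
    if safe is not None:
        return safe, False
    fallback = bfs(grid, start, goal, allow_fire=True, max_steps=max_steps)
    return (fallback, True) if fallback is not None else (None, False)


grid = [
    "..F..",
    ".#F#.",
    ".....",
]
print(find_path(grid, (0, 0), (0, 4), max_steps=15))  # fire-free detour
print(find_path(grid, (0, 0), (0, 4), max_steps=5))   # detour too long, falls back to fire
```

The fallback here returns the shortest path that fits the limit, not the one with the least fire, so it's a baseline to test a single-pass version against rather than a final answer.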

I've had a lot more success in debugging than in writing code. I had a problem with adjusting the sample rate of a certain metrics framework in a Java application, and Stack Overflow failed me, both when searching for an answer and when asking the question. However, when I in some desperation asked GPT 3.5, I received a great answer which pinpointed the necessary adjustment.

However, asking it to write simple code snippets, e.g. for migrating to a different Elasticsearch client framework, has not been great. I'm often met with confidently wrong answers.

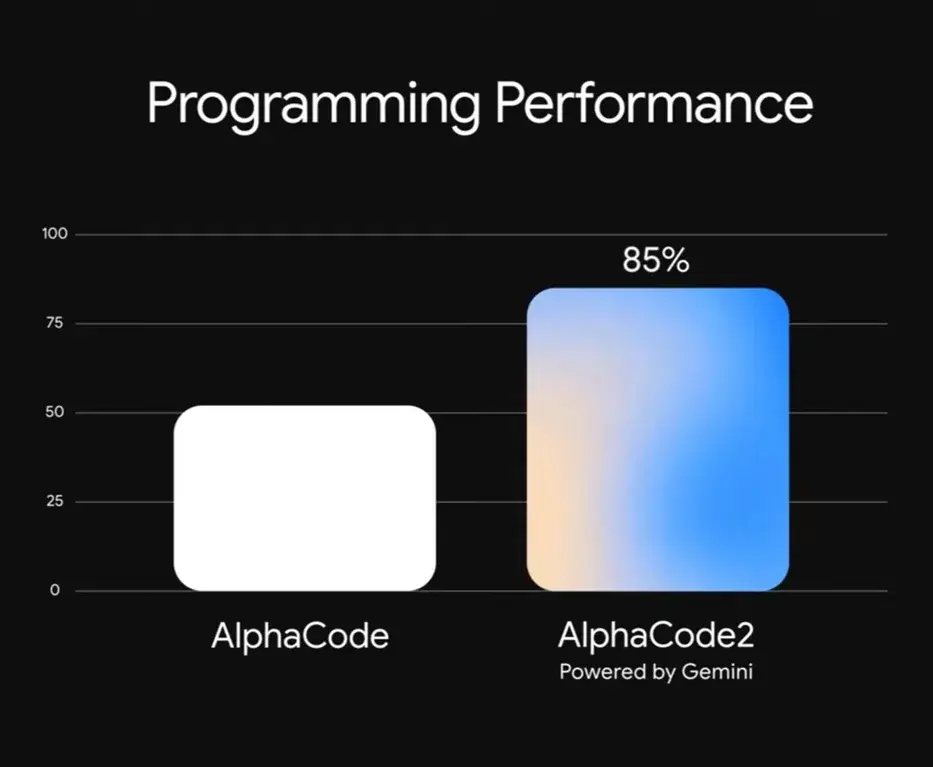

Yeah, not soon, 3-10 years I'd guess. The latest research tracking AI growth says that our best models can solve entry-level CS problems at 85% success. That's not good.

It's obvious that humans do more than just pattern matching. I think I would rate the current systems as a 25% speedup to my workflow, not bad, but only for menial tasks

Google Gemini Powered AlphaCode 2 Technical Report

HumanEval achieved 74.4%, surpassing GPT-4 at 67%. It successfully solves 43% of problems in the latest Codeforces rounds with 10 attempts. The evaluation considered the time penalty, and it still ranks in the 85th percentile or higher. AlphaCode 2 already beats 85% of people in top programming competitions (which are already better than 99% of engineers out there). So, I believe AI already writes better short code than the average programmer, but I don’t think it can debug any code yet. I’d say it will need a platform to test and iteratively rewrite the code, and I don’t see that happening earlier than 3 years.

I actually have used it to debug code before. Not an entire program (yet) but it's great for snippets where you're just missing a semicolon or bracket, or need advice on how to properly call a weird function. It also writes small things like batch files incredibly well. Just like with regular language, it's great for a few paragraphs, then begins to drift as it struggles to parse longer conversations. So if you only need it for a few "paragraphs" of code, it's great.

I also like to give AI my code and just ask to rewrite it, implementing a cleaner solution and upholding best practices. Most times, there are things that are really an improvement!

Yup. Every time I feed it my code, I find some new trick or method I hadn’t thought of before. Being self-taught, it really is a remarkable tool for seeing what efficient code looks like.