this post was submitted on 27 Mar 2024

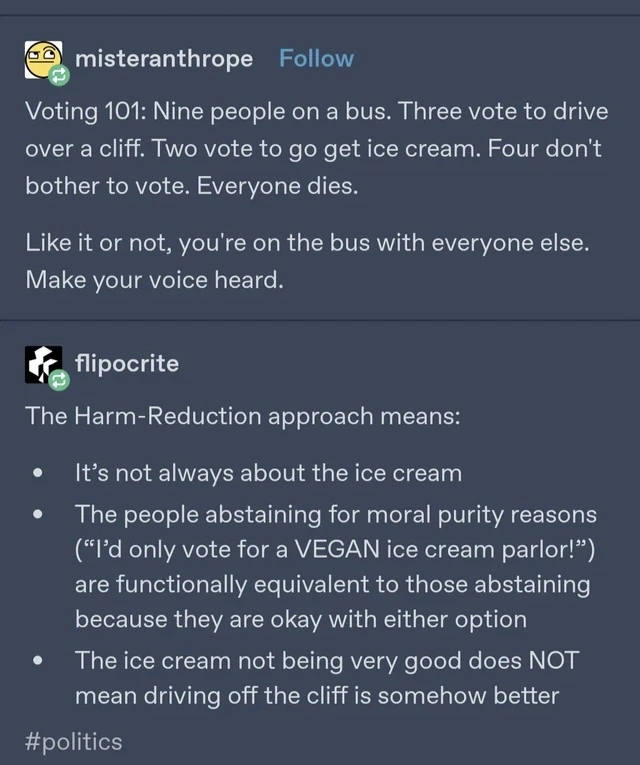

I'm doing the moral calculations. Fuck Biden, but fuck Trump more. If I vote for Biden, millions suffer. If I vote for Trump (or waste my vote so he wins), even more suffer. It doesn't take much evaluating to pick being slapped in the face over being punched in the face.

I absolutely support the undecided campaign in the primaries to put pressure on Biden, but putting pressure on him and the DNC by letting Trump be in charge for another 4 years is a terrible idea. Even without Trump in office, the GOP is quickly eroding even the semblance of democracy we've had for a while, and with 4 years of Trump accelerating that, I think this would be the last time my vote even slightly matters. Then I'd also need to evacuate from the country because I'm trans and don't want them ruining my life. I'm voting for Biden in the general to avoid that, and afterwards I guess I'll have to figure out what I can even do about the still-evolving bad situation that I helped slow down.

If reducing suffering and not letting an impossible perfect be the enemy of the better is amoral, I don't understand your definition of moral. I'm a utilitarian if you couldn't tell.

> I’m a utilitarian if you couldn’t tell.

oh my. how do you deal with the fact that the future is unknowable, so the morality of all actions is also unknowable?

I account for that, obviously. Expected value is a good approximation.

to be clear, you acknowledge that you can't know which actions are moral under your system, but you still rely on it to make moral actions?

There's always uncertainty, yes. I suppose other moral systems claim they're infallible but those people are just kidding themselves.

a deontological system places the morality in the action itself, so you know before you do it whether it's the right thing to do. consequentialist systems change the morality of the action depending on the results in the future.

what if we need trump to be elected in order to escape earth before the sun goes nova? it's an unknowable proposition, but are you willing to risk all of humanity on voting for biden?

If you can convince me that voting for Trump will give greater expected value then I'll do it, but absurd possibilities like the one you described usually come with an exact inverse that cancels out their expected value.

Should I let that butterfly flap its wings? What if it causes a tornado somewhere?! Or, what if it not flapping causes a tornado somewhere?! Both are equally plausible, so there's no point in choosing my actions based on them.
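To put rough numbers on the cancellation argument, here's a minimal sketch. Every figure in it is made up for illustration, and `expected_value` is just a hypothetical helper, not anything from a library. The point is only that a far-fetched scenario and its equally plausible mirror image contribute nothing on net, so the foreseeable outcomes decide the comparison.

```python
def expected_value(outcomes):
    """Sum of probability * value over (probability, value) pairs."""
    return sum(p * v for p, v in outcomes)

# Hypothetical numbers, chosen only to illustrate the argument.
p_absurd = 1e-9   # tiny chance the far-fetched scenario is real
stakes = 1e12     # enormous value at stake if it is

# The far-fetched scenario and its equally plausible inverse:
# same probability, opposite sign.
speculative = [(p_absurd, +stakes), (p_absurd, -stakes)]

# Foreseeable outcomes of one action (also invented values).
foreseeable = [(0.9, -10), (0.1, -20)]

ev_speculative = expected_value(speculative)
ev_foreseeable = expected_value(foreseeable)

print(ev_speculative)                   # the symmetric terms cancel
print(ev_foreseeable + ev_speculative)  # so only the foreseeable part remains
```

The cancellation depends on the assumption that both far-fetched branches really are equally likely. If someone can show one is more plausible than the other, the terms stop cancelling and the calculation changes.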

I think you understand the problem of the unknowableness of the effects of our actions, and subsequently how absurd it is to use that as a basis of our morality.

I'm not trying to get you to vote for trump, I'm trying to get you to choose a useful moral framework.

This is useful though. Pretending there's no uncertainty is just kidding yourself.

the uncertainty shifts within the framework from whether my actions will have a good outcome to whether i know which actions are moral. i suppose it's possible that i might not know, but the categorical imperative is pretty easy to apply, so my confidence is much higher than i imagine is possible for any action within a utilitarian frame: you are totally dependent on unknowable circumstances to determine the morality of past actions.

I want good outcomes, not the feeling of personal moral purity. Outcomes are inherently uncertain. You can say "murder bad, no uncertainty", but that still leaves the outcome, the part I care about, uncertain.

If I wanted moral certainty above all else, I could just say everything's moral.