The number refers to the number of sections the fold(s) create, not the number of folds.

Example: Bifold and Trifold wallets.

Huh? Each hinge will be doing exactly the same thing as the hinge on every other foldable has done for the last 5 years.

Why would it need to lock? It's no different from a normal foldable in that regard: the hinges are firm and hold whatever position you put them in.

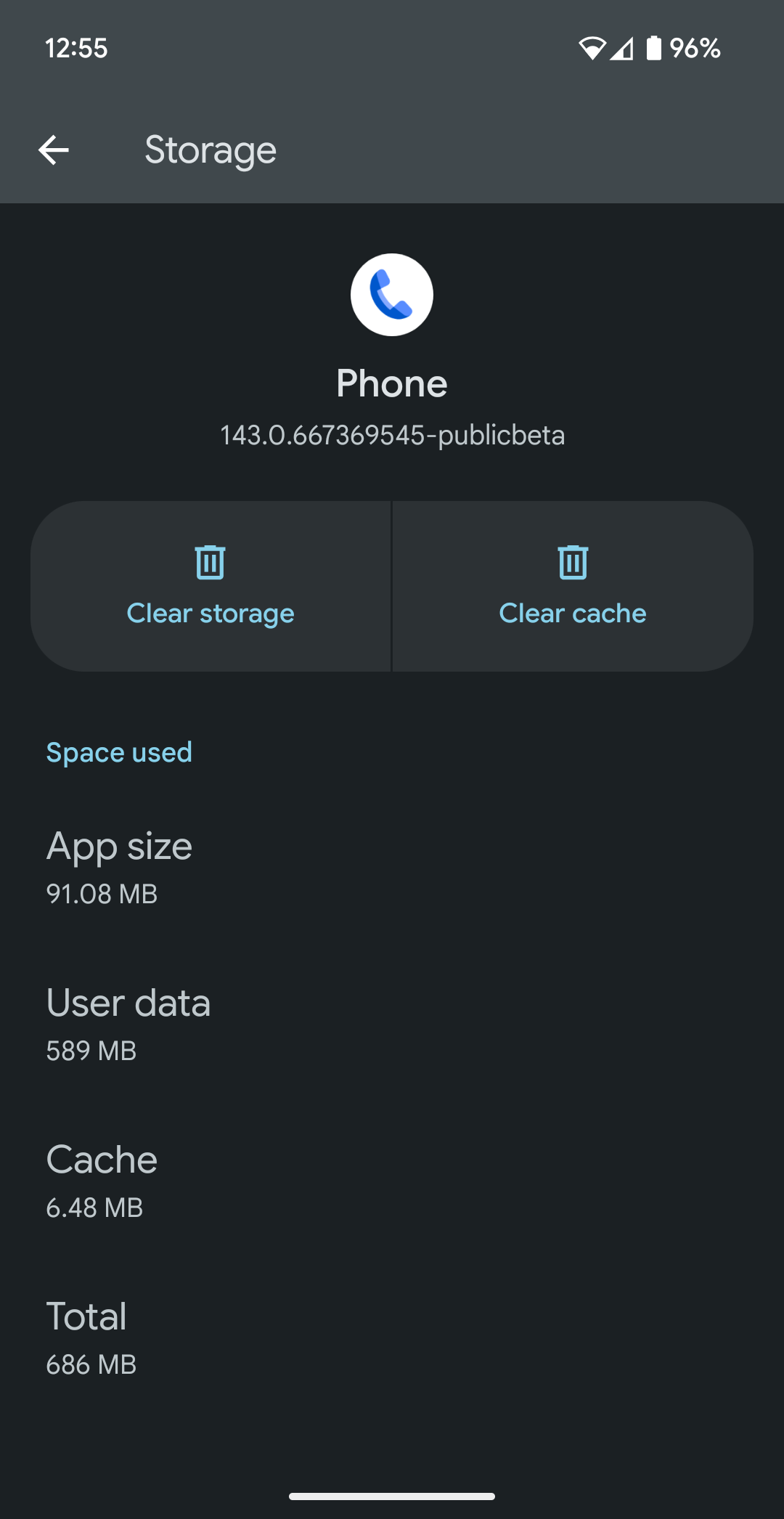

The app itself (GUI) and the integration of those services are responsible for the size of the app.

That's < 100MB for me.

It's a drop in the bucket though. I'd be shocked if it was more than 1%. So it doesn't explain anything.

So it's basically "telemetry".

There's just no way. Telemetry is just text logs and should never take up more than a couple of MB. The logs are also deleted routinely once they've been sent to the server.

I'm going to be honest: I actually know a lot more about this than I can say. But believe me, Gemini Nano is a multimodal LLM.

I spoke to Google engineers about this a few months ago:

I don't run GrapheneOS. How convenient that you are all of a sudden too lazy to prove you are right, or that you have a clue what you are talking about.

You are aware that those are often called LMMs (Large Multimodal Models)? And one of the modes that makes them multimodal is language. All LMMs are or contain an LLM.

When are you going to admit you have no idea what you are talking about?

An LLM literally is a "general AI model that powers a variety of tasks".

You could show a single advertised feature working...

I realize this completely misses the point, but the problem is actually dust, not water. All these foldables are IPX8, meaning they are highly water-resistant but not rated for dust and debris.

I completely agree with your repairability point btw.