this post was submitted on 05 Feb 2024

639 points (87.7% liked)

Memes


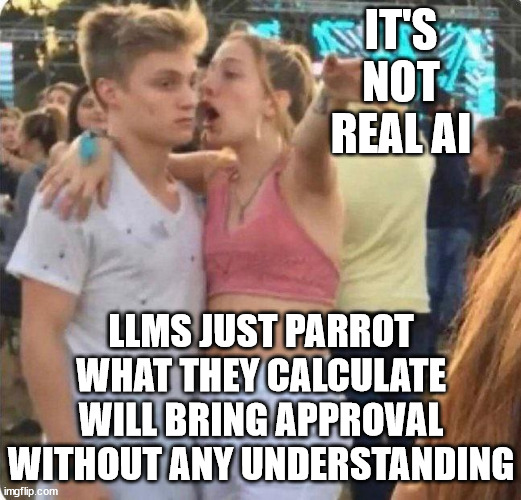

Yeah, this sounds about right. What was OP implying? I’m a bit lost.

I believe they were implying that a lot of the people who say "it's not real AI it's just an LLM" are simply parroting what they've heard.

Which is a fair point, because AI has never meant "general AI"; it's an umbrella term for a wide variety of intelligence-like tasks performed by computers.

Autocorrect on your phone is a type of AI: it checks what you type against a database of known words, measures how close your input is to each of those words via a "typo distance", and adds new words to its database when you overrule it so it doesn't make the same mistake again.
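To make the "typo distance" idea concrete, here's a toy sketch. It assumes the distance is Levenshtein edit distance (the minimum number of single-character insertions, deletions, and substitutions), which is one common choice; real phone keyboards use their own, more elaborate models. The class `ToyAutocorrect`, its `max_distance` cutoff, and the `overrule` method are all hypothetical names made up for illustration.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


class ToyAutocorrect:
    """Hypothetical toy autocorrect, not any real keyboard's implementation."""

    def __init__(self, words, max_distance: int = 2):
        self.known = set(words)           # the "database of known words"
        self.max_distance = max_distance  # assumed cutoff for suggesting a fix

    def suggest(self, typed: str) -> str:
        if typed in self.known:
            return typed
        # Closest known word by typo distance; ties broken alphabetically.
        best = min(self.known, key=lambda w: (edit_distance(typed, w), w), default=None)
        if best is not None and edit_distance(typed, best) <= self.max_distance:
            return best
        return typed  # nothing close enough, leave the input alone

    def overrule(self, word: str) -> None:
        # The user rejected the correction, so remember the word as valid.
        self.known.add(word)
```

For example, with `ac = ToyAutocorrect(["hello", "help", "world"])`, `ac.suggest("wrld")` returns `"world"` and `ac.suggest("helps")` returns `"help"`; after `ac.overrule("helps")`, the same call returns `"helps"` unchanged.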

It's like saying a motorcycle isn't a real vehicle because a real vehicle has two wings and a roof, carries hundreds of people, and flies through the air.

I've often seen people on Lemmy confidently state that current "AI" thinks and learns exactly like humans and that LLMs work exactly like human brains, etc.

Weird, I don't think I've ever seen that even remotely claimed.

Closest I think I've come is the argument that legally, AI learning systems are similar to how humans learn, namely storing information about information.

It's usually some rant about "brains are just probability machines as well" or "every artist learns from thousands of pictures by other artists, just as image generator xy does".

Are you sure this wasn't just people stating that when it comes to training on art there is no functional difference in the sense that both humans and AI need to see art to make it?

Do you mean in the everyday sense or the academic sense? I think this is why there's such grumbling around the topic. Academically speaking that may be correct, but for the general public, AI has been more muddled and presented in a much more robust, general-AI way, especially in fiction. Look at any number of sci-fi movies featuring forms of AI, whether it's the movie literally named AI, Terminator, Blade Runner, or more recently Ex Machina.

Each of these may technically be presenting general AI, but for the public, it's just AI. In a weird way, this discussion is an inversion of what one usually sees between academics and the public. Generally, academics are trying to get the public not to use technical terms loosely, yet here some of the public is trying to get parts of the tech and academic sphere to stop using technical terms loosely, at least as they see it.

Arguably it's from a misunderstanding, but if anyone should understand the dynamics of language, you'd hope it would be those trying to calibrate machines to process language.

Well, that's the issue at the heart of it I think.

How much should we cater our choice of words to those who know the least?

I'm not an academic, and I don't work with AI, but I do work with computers and I know the distinction between AI and general AI.

I'm a little irritated by this theme, given that I work in the security industry and it's now difficult to use "crypto", the common abbreviation for cryptography, without getting Bitcoin mixed up in everything.

All that aside, the point is that people talking about how it's not "real AI" often come across as people who don't know what they're talking about, which was the point of the image.

I believe OP is attempting to take on an army of straw men in the form of a poorly chosen meme template.

No, people say this constantly; it's not just a straw man.

I think OP implied that AI is neat.

I guess that no matter what they are or what you call them, they can still be useful.

Pretty sure the meme format is for something you get extremely worked up about and want to passionately tell someone, even at inappropriate moments, but no one really gives a fuck.

People who don't understand or use AI think it's less capable than it is, claim it's not AGI (which no one else was saying anyway), and try to make it seem less valuable because it's "just using datasets to extrapolate, it doesn't actually think."

Guess what you're doing right now when you "think" about something? That's right, you're calling up the thousands of experiences that make up your "training data" and using it to extrapolate on what actions you should take based on said data.

You know how to parallel park because you've assimilated road laws, your muscle memory, and the knowledge of your car's wheelbase into a single action. AI just doesn't have sapience and therefore cannot act without input, but the process it uses is functionally similar to how we make decisions; the difference is that its training data gets input within seconds rather than being built up over a lifetime.

People who aren't programmers, haven't studied computer science, and don't understand LLMs are much more impressed by LLMs.

That's true of any technology. As someone who is a programmer, has studied computer science, and does understand LLMs, I'd say this represents a massive leap in capability. Is it AGI? No. Is it a potential paradigm shift? Yes. This isn't pure hype like crypto was; there is a core of utility here.

Crypto was never pure hype either. Decentralized currency is an important thing to have; it's just shitty that it turned into a speculative investment asset rather than a way to buy drugs online without the glowies looking.

Crypto solves a few theoretical problems and creates a few real ones.

Yeah, I studied CS and work in IT Ops. I'm not claiming this shit is Cortana from Halo, but it's also not NFTs. If you can't see the value, you haven't used it for anything serious, 'cause it's taking jobs left and right.

In my experience it's the opposite, but the emotional reaction isn't so much being impressed as being afraid and claiming it's all just plagiarism.

If you've ever actually used any of these algorithms, it becomes painfully obvious they do not "think". Give one a task slightly more complex or nuanced than what it has been trained on and you will see it draw conclusions that would be obviously wrong had any actual thought taken place. Generalization, a fundamental part of human problem solving, is not something they do.

Make no mistake: they are text predictors.
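For a feel of what "text predictor" means at its most stripped-down, here's a toy bigram model: it counts which word follows which in some training text and always predicts the most frequent follower. This is a deliberately simplified stand-in, not how LLMs are built; real LLMs use large neural networks over subword tokens and condition on long contexts. The function names `train_bigrams`, `predict_next`, and `generate` are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never followed."""
    counts = model.get(word.lower())  # .get avoids inserting into the defaultdict
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(model: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily append predicted words, stopping early if no prediction exists."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(model, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out
```

Trained on `"the cat sat on the mat the cat ran"`, `predict_next(model, "the")` gives `"cat"` (seen twice versus `"mat"` once), and it has nothing to say about a word like `"dog"` that never appeared, which is the crude version of the generalization problem described above.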