this post was submitted on 20 Jan 2024


Data Is Beautiful


A place to share and discuss data visualizations. #dataviz

(under new moderation as of 2024-01, please let me know if there are any changes you want to see!)

founded 3 years ago


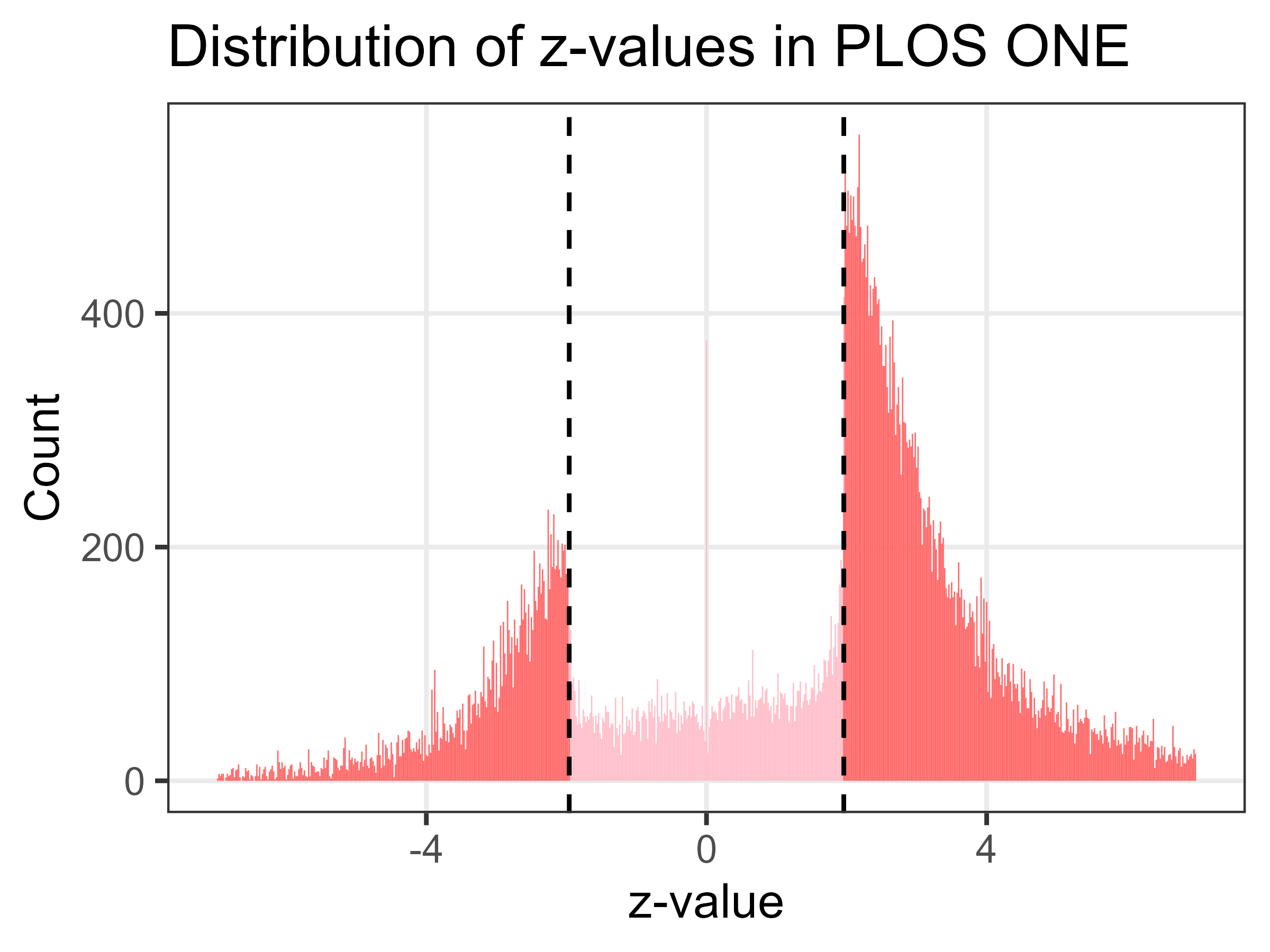

Or maybe experimenters are opting to do further research themselves rather than publish ambiguous results. If you aren't doing MRI or costly field work, fine-tuning your experimental design to get a conclusive result is a more attractive option than publishing a null result that could be significant, or a significant result that you fear might need retracting later.

While this might seem reasonable at first, I feel it is at odds with the current state of modern science, where results are no longer the product of individuals like Newton or the Curies, but rather of whole teams and even organizations, working across universities and across, or even beyond, this world. The thought of hoarding a topic to oneself until it's ripe seems more akin to commercial or military pursuits than to academia.

But that gut feeling aside, withholding data does have a cost, be it more pedestrians being hit by cars or bunk science taking longer to disprove. At some point, a prolonged delay or shelving data outright becomes unethical.

I'm not sure I agree. Science is constrained by human realities, like funding, timelines, and lifespans. If researchers are collecting grants for research, I think it's fair for the benefactors to expect the fruits of the investment -- in the form of published data -- even if it's not perfect and conclusive, or even if the lead author dies before the follow-up research is approved. Allowing someone else to later pick up the baton is not weakness but humility.

In some ways, I feel that "publish or perish" could actually be a workable framework if it had the right incentives. No, we don't want researchers torturing the data until there's a sexy conclusion. But we do want researchers to work together, either in parallel for a shared conclusion, or by building on existing work. Yes, we want repeat experiments to double check conclusions, because people make mistakes. No, we don't want ten research groups fighting against each other to be first to print, wasting nine redundant efforts.

I'm not aware of papers getting retracted because their conclusion was later disproved, but rather because their procedure was unsound. Science is a process, honing towards the truth -- whatever it may be -- and accepting its results, or sometimes its lack of results.

I mean, I'm speaking from firsthand experience in academia. Like I mentioned, this obviously isn't the case for people running prohibitively costly experiments, but it is absolutely the case for teams where acquiring more data just means throwing a few more weeks of lab time at the problem; the grunt work is usually being done by the students anyway. There are a lot more labs in existence that consist of just a PI and 5-10 grad students/post-docs than there are mega labs working at CERN.

There were a handful of times I remember rerunning an experiment that was on the cusp, either to solidify a result or to rule out a significant finding that I correctly suspected was just luck - what is another 3 weeks of data collection when you are spending up to a year designing/planning/iterating/writing to get the publication?