Hello,

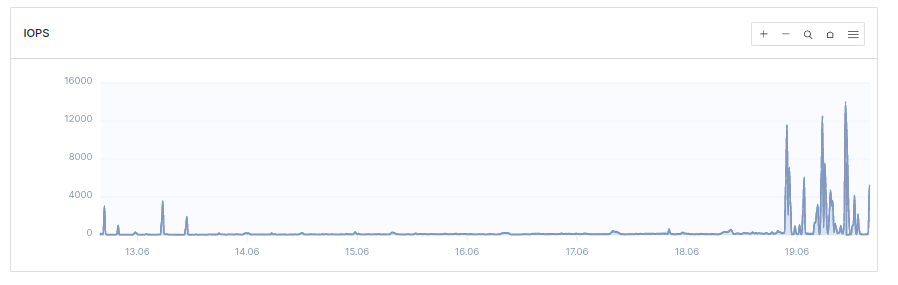

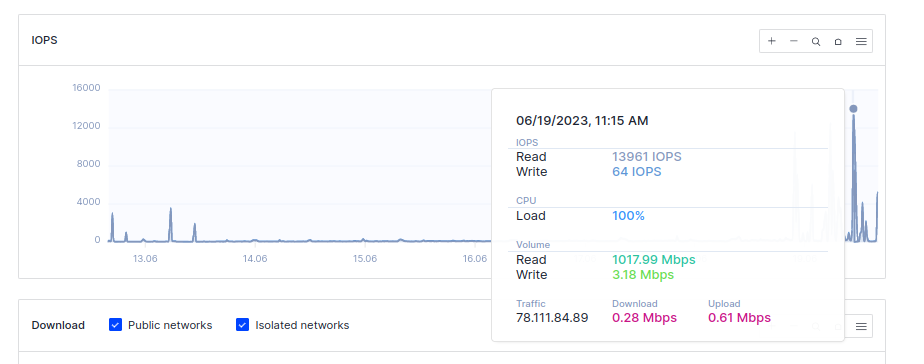

In the last 24 hours my instance has been experiencing spikes in CPU usage. During these spikes, the instance is unreachable.

The spikes are not correlated with an increase in bandwidth use. They are strongly correlated with IOPS spikes, specifically with read operations.

Watching htop during these spikes points to `/app/lemmy` and a postgres UPDATE process as the potential culprits, with the postgres UPDATE being my main suspect.

Looking through the postgres logs from the time of the spikes, I think this block may be related:


2023-06-19 14:28:51.137 UTC [1908] STATEMENT: SELECT "comment"."id", "comment"."creator_id", "comment"."post_id", "comment"."content", "comment"."removed", "comment"."published", "comment"."updated", "comment"."deleted", "comment"."ap_id", "comment"."local", "comment"."path", "comment"."distinguished", "comment"."language_id", "person"."id", "person"."name", "person"."display_name", "person"."avatar", "person"."banned", "person"."published", "person"."updated", "person"."actor_id", "person"."bio", "person"."local", "person"."banner", "person"."deleted", "person"."inbox_url", "person"."shared_inbox_url", "person"."matrix_user_id", "person"."admin", "person"."bot_account", "person"."ban_expires", "person"."instance_id", "post"."id", "post"."name", "post"."url", "post"."body", "post"."creator_id", "post"."community_id", "post"."removed", "post"."locked", "post"."published", "post"."updated", "post"."deleted", "post"."nsfw", "post"."embed_title", "post"."embed_description", "post"."embed_video_url", "post"."thumbnail_url", "post"."ap_id", "post"."local", "post"."language_id", "post"."featured_community", "post"."featured_local", "community"."id", "community"."name", "community"."title", "community"."description", "community"."removed", "community"."published", "community"."updated", "community"."deleted", "community"."nsfw", "community"."actor_id", "community"."local", "community"."icon", "community"."banner", "community"."hidden", "community"."posting_restricted_to_mods", "community"."instance_id", "comment_aggregates"."id", "comment_aggregates"."comment_id", "comment_aggregates"."score", "comment_aggregates"."upvotes", "comment_aggregates"."downvotes", "comment_aggregates"."published", "comment_aggregates"."child_count", "comment_aggregates"."hot_rank", "community_person_ban"."id", "community_person_ban"."community_id", "community_person_ban"."person_id", "community_person_ban"."published", "community_person_ban"."expires", "community_follower"."id", 
"community_follower"."community_id", "community_follower"."person_id", "community_follower"."published", "community_follower"."pending", "comment_saved"."id", "comment_saved"."comment_id", "comment_saved"."person_id", "comment_saved"."published", "person_block"."id", "person_block"."person_id", "person_block"."target_id", "person_block"."published", "comment_like"."score" FROM ((((((((((("comment" INNER JOIN "person" ON ("comment"."creator_id" = "person"."id")) INNER JOIN "post" ON ("comment"."post_id" = "post"."id")) INNER JOIN "community" ON ("post"."community_id" = "community"."id")) INNER JOIN "comment_aggregates" ON ("comment_aggregates"."comment_id" = "comment"."id")) LEFT OUTER JOIN "community_person_ban" ON ((("community"."id" = "community_person_ban"."community_id") AND ("community_person_ban"."person_id" = "comment"."creator_id")) AND (("community_person_ban"."expires" IS NULL) OR ("community_person_ban"."expires" > CURRENT_TIMESTAMP)))) LEFT OUTER JOIN "community_follower" ON (("post"."community_id" = "community_follower"."community_id") AND ("community_follower"."person_id" = $1))) LEFT OUTER JOIN "comment_saved" ON (("comment"."id" = "comment_saved"."comment_id") AND ("comment_saved"."person_id" = $2))) LEFT OUTER JOIN "person_block" ON (("comment"."creator_id" = "person_block"."target_id") AND ("person_block"."person_id" = $3))) LEFT OUTER JOIN "community_block" ON (("community"."id" = "community_block"."community_id") AND ("community_block"."person_id" = $4))) LEFT OUTER JOIN "comment_like" ON (("comment"."id" = "comment_like"."comment_id") AND ("comment_like"."person_id" = $5))) LEFT OUTER JOIN "local_user_language" ON (("comment"."language_id" = "local_user_language"."language_id") AND ("local_user_language"."local_user_id" = $6))) WHERE (((((("community"."hidden" = $7) OR ("community_follower"."person_id" = $8)) AND ("local_user_language"."language_id" IS NOT NULL)) AND ("community_block"."person_id" IS NULL)) AND ("person_block"."person_id" IS 
NULL)) AND (nlevel("comment"."path") <= $9)) ORDER BY subpath("comment"."path", $10, $11), "comment_aggregates"."hot_rank" DESC LIMIT $12 OFFSET $13

2023-06-19 14:28:51.157 UTC [1] LOG: background worker "parallel worker" (PID 1907) exited with exit code 1

2023-06-19 14:28:51.246 UTC [1] LOG: background worker "parallel worker" (PID 1908) exited with exit code 1

2023-06-19 14:28:55.228 UTC [48] ERROR: could not resize shared memory segment "/PostgreSQL.3267719818" to 8388608 bytes: No space left on device
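The last line is the only ERROR in the block, so it may be worth checking whether shared memory is running out. Below is a minimal sketch, assuming Postgres runs on Linux (possibly in a container), where POSIX shared memory segments such as `/PostgreSQL.3267719818` are backed by `/dev/shm`. The function names are made up for illustration; the script pulls the requested size out of the error line and compares it with the free space on that mount:

```python
import re
import shutil
from typing import Optional

# ERROR line copied from the log excerpt above.
ERROR_LINE = (
    '2023-06-19 14:28:55.228 UTC [48] ERROR: could not resize shared memory '
    'segment "/PostgreSQL.3267719818" to 8388608 bytes: No space left on device'
)

# Matches the segment name and the size Postgres tried to resize it to.
RESIZE_ERROR = re.compile(
    r'could not resize shared memory segment "(?P<name>[^"]+)" '
    r'to (?P<size>\d+) bytes'
)


def requested_bytes(line: str) -> Optional[int]:
    """Return the segment size requested in a resize error line, else None."""
    match = RESIZE_ERROR.search(line)
    return int(match.group("size")) if match else None


def shm_report(path: str, needed: int) -> dict:
    """Compare free space on `path` with the number of bytes needed."""
    usage = shutil.disk_usage(path)
    return {
        "path": path,
        "total_mib": usage.total / 2**20,
        "free_mib": usage.free / 2**20,
        "needed_mib": needed / 2**20,
        "fits": needed <= usage.free,
    }


if __name__ == "__main__":
    needed = requested_bytes(ERROR_LINE)
    if needed is not None:
        # Assumption: /dev/shm is the mount backing Postgres' shared memory.
        print(shm_report("/dev/shm", needed))
```

This needs to run where `/dev/shm` is the same mount that Postgres sees (inside the container, if there is one). If Python is not available there, `df -h /dev/shm` reports the same totals.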

Has anyone else faced this issue? One idea is that the database has grown to the point where my VPS no longer has enough CPU to handle a routine operation. But that would not explain why the spikes appeared so suddenly: I would have expected them to grow gradually over time.