this post was submitted on 21 Oct 2024

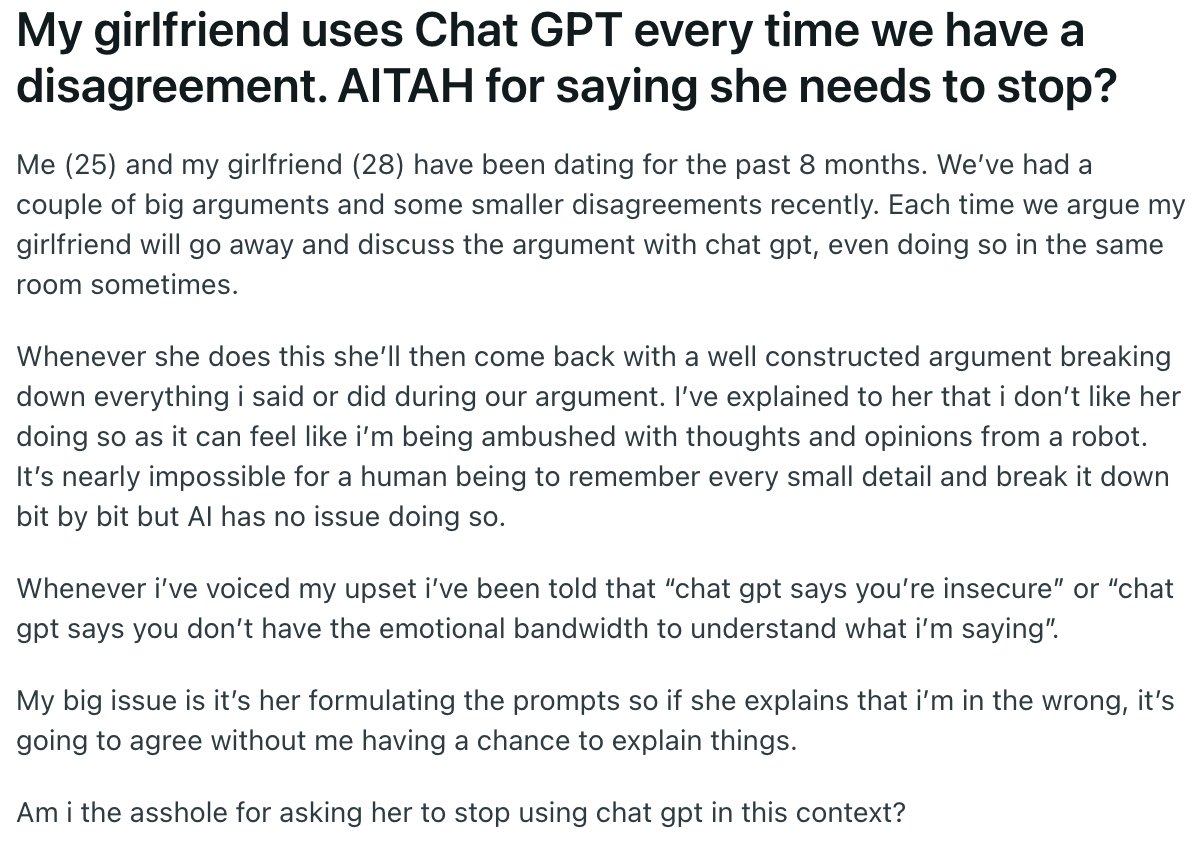

I've used ChatGPT for argument advice before. Not, like, weaponizing it ("hahah robot says you're wrong! Checkmate!") but more for sanity testing: do these arguments make sense, etc.

I always try to strip identifying information from the stuff I input, so it HAS to pick a side. It gets it "right" (siding with the author/me) about half the time, it feels. Usually I'll ask it to break down each side's argument individually, then choose the one it agrees with and explain why.

I've used it to tone down the language I wanted to use in an angry email. I wrote the draft that I wanted to send, and then copied it in and said "What's a more diplomatic way to write this?" It gave a very milquetoast revision, so I said "Keep it diplomatic, but a little bit more assertive," and it gave me another, better draft. Then I rewrote the parts that were obviously in robot voice so they were more plausibly like something I would write, and I felt pretty good about that response.

The technology has its uses, but good God, if you don't actually know what you're talking about when you use it, it's going to feed you dogshit and tell you it's caviar, and you aren't going to know the difference.

Flip a coin instead

Coins don't usually offer a reason and explanation for the result. The valuable bit often isn't just the answer itself, it's the process used to arrive at it. That's why I tell it to give me a rationale.

Still obsessive.