I'm proud to share a major development status update of XPipe, a new connection hub that allows you to access your entire server infrastructure from your local desktop. It works on top of your installed command-line programs and does not require any setup on your remote systems. So if you normally use CLI tools like ssh, docker, kubectl, etc. to connect to your servers, it will automatically integrate with them.

Here is how it looks like if you haven't seen it before:

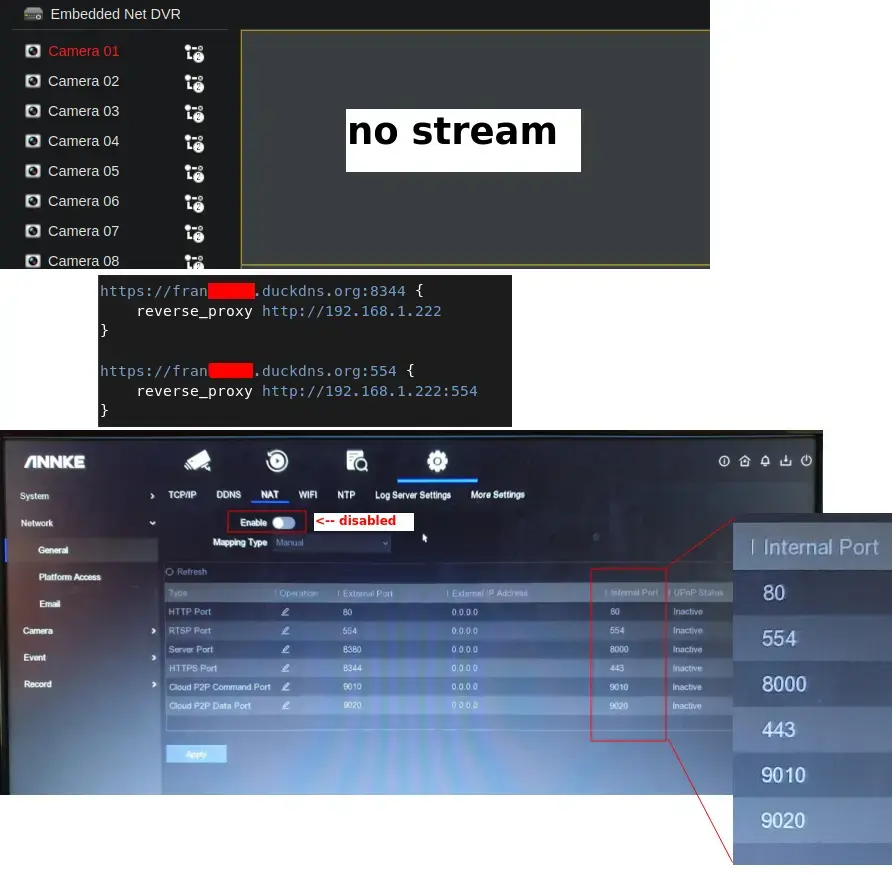

Local forwarding for services

Many systems run a variety of different services such as web services and others. There is now support to detect, forward, and open the services. For example, if you are running a web service on a remote container, you can automatically forward the service port via SSH tunnels, allowing you to access these services from your local machine, e.g., in a web browser. These service tunnels can be toggled at any time. The port forwarding supports specifying a custom local target port and also works for connections with multiple intermediate systems through chained tunnels. For containers, services are automatically detected via their exposed mapped ports. For other systems, you can manually add services via their port.

Markdown notes

Another feature commonly requested was the ability to create and share notes for connections. As Markdown is everywhere nowadays, it makes sense so to implement any kind of note-taking functionality with Markdown. So you can now add notes to any connection with Markdown. The full spec is supported. The editing is delegated to a local editor of your choice, so you can have access to advanced editing features and syntax highlighting there.

Proxmox improvements

You can now automatically open the Proxmox dashboard website through the new service integration. This will also work with the service tunneling feature for remote servers.

You can now open VNC sessions to Proxmox VMs.

The Proxmox support has been reworked to support one non-enterprise PVE node in the community edition.

Scripting improvements

The scripting system has been reworked. There have been several issues with it being clunky and not fun to use. The new system allows you to assign each script one of multiple execution types. Based on these execution types, you can make scripts active or inactive with a toggle. If they are active, the scripts will apply in the selected use cases. There currently are these types:

- Init scripts: When enabled, they will automatically run on init in all compatible shells. This is useful for setting things like aliases consistently

- Shell scripts: When enabled, they will be copied over to the target system and put into the PATH. You can then call them in a normal shell session by their name, e.g.

myscript.sh, also with arguments.

- File scripts: When enabled, you can call them in the file browser with the selected files as arguments. Useful to perform common actions with files

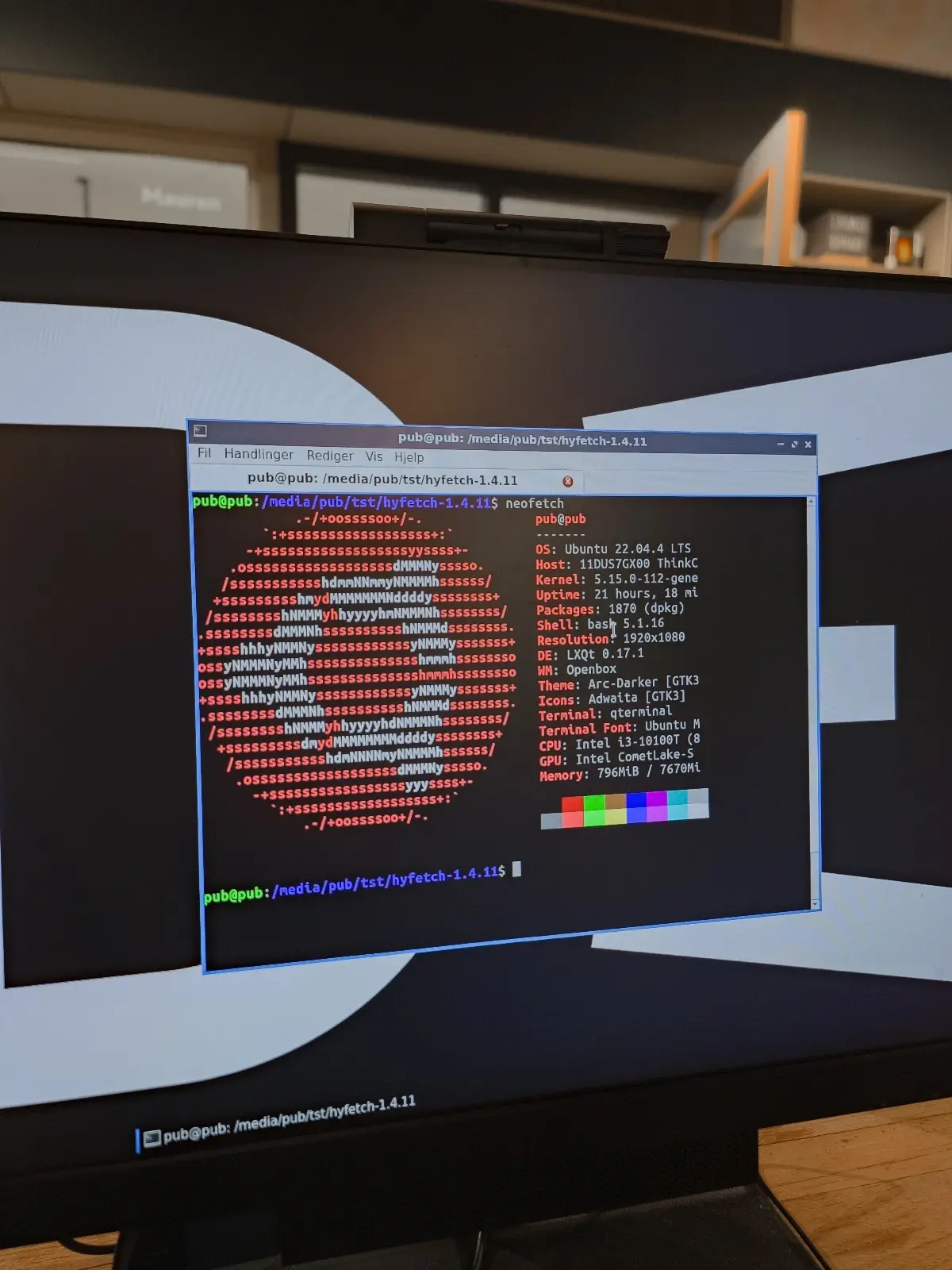

AppImage support

There are now AppImage releases available for x86_64 and arm64 platforms.

While there were already other artifact types available for most Linux systems, this is useful for atomic distributions.

A new HTTP API

For a programmatic approach to manage connections, XPipe 10 comes with a built-in HTTP server that can handle all kinds of local API requests. There is an openapi.yml spec file that contains all API definitions and code samples to send the requests.

To start off, you can query connections based on various filters. With the matched connections, you can start remote shell sessions and for each one and run arbitrary commands in them. You get the command exit code and output as a response, allowing you to adapt your control flow based on command outputs. Any kind of passwords and other secrets are automatically provided by XPipe when establishing a shell connection. You can also access the file systems via these shell connections to read and write remote files.

A note on the open-source model

Since it has come up a few times, in addition to the note in the git repository, I would like to clarify that XPipe is not fully FOSS software. The core that you can find on GitHub is Apache 2.0 licensed, but the distribution you download ships with closed-source extensions. There's also a licensing system in place as I am trying to make a living out of this. I understand that this is a deal-breaker for some, so I wanted to give a heads-up.

The system is designed to allow for unlimited usage in non-commercial environments and only requires a license for more enterprise-level environments. This system is never going to be perfect as there is not a very clear separation in what kind of systems are used in, for example, homelabs and enterprises. But I try my best to give users as many free features as possible for their personal environments.

Outlook

If this project sounds interesting to you, you can check it out on GitHub! There are more features to come in the near future.

Enjoy!