I don't think LLMs should be taken down; it would be impossible for that to happen. I do, however, think they should be forced into open source.

Yes. I'd also add that current copyright laws are archaic and counterproductive when combined with modern technology.

Creators need protection, but only for 15 years. Not death + 70 years.

Okay that's just stupid. I'm really fond of AI but that's just common Greed.

"Free the Serfs?! We can't survive without their labor!!" "Stop Child labour?! We can't survive without them!" "40 Hour Work Week?! We can't survive without their 16 Hour work Days!"

If you can't make profit yet, then fucking stop.

Am I the only person who remembers that it was "you wouldn't steal a car", or has everyone just decided to pretend it was "you wouldn't download a car" because that's easier to dunk on?

People remember the parody, which is usually modified to be more recognizable. Like Darth Vader never said "Luke, I am your father"; in the movie it's actually "No, I am your father".

While I agree that using copyrighted material to train your model is not theft, text that model produces can very much be plagiarism and OpenAI should be on the hook when it occurs.

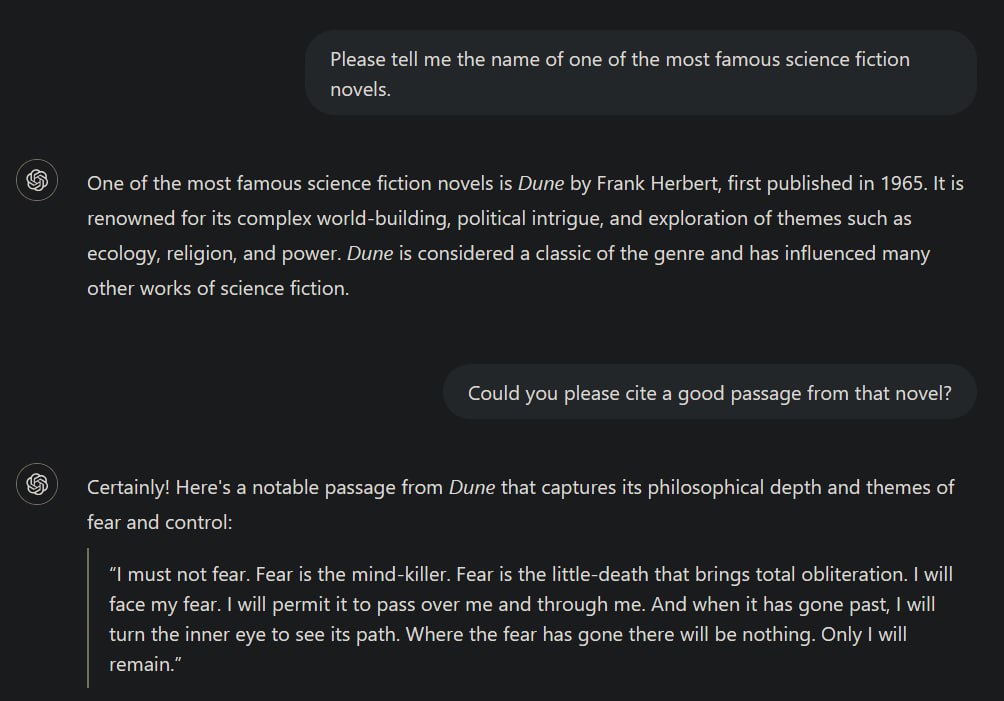

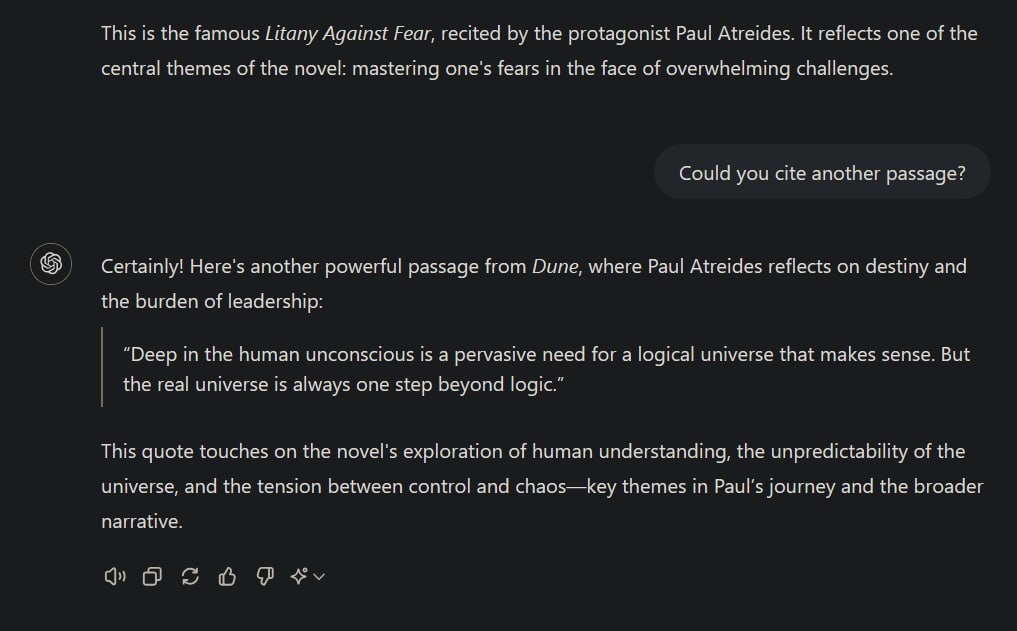

When AI systems ingest copyrighted works, they're extracting general patterns and concepts - the "Bob Dylan-ness" or "Hemingway-ness" - not copying specific text or images.

Okay.

This process is akin to how humans learn by reading widely and absorbing styles and techniques, rather than memorizing and reproducing exact passages. The AI discards the original text, keeping only abstract representations in "vector space".

Citation needed. I’m pretty sure LLMs have exactly reproduced copyrighted passages. And considering it can create detailed summaries of copyrighted texts, it obviously has to save more than “abstract representations.”

We have hundreds of years of out of copyright books and newspapers. I look forward to interacting with old-timey AI.

"Fiddle sticks! These mechanical horses will never catch on! They're far too loud and barely more faster than a man can run!"

"A Woman's place is raising children and tending to the house! If they get the vote, what will they demand next!? To earn a Man's wage!?"

That last one is still relevant to today's discourse somehow!?

As someone who researched AI pre-GPT to enhance human creativity and aid in creative workflows, I'm sad, but not surprised, to see the direction in which it's been marketed. I'm personally excited by the tech because I see a really positive place for it where the data usage is arguably justified, but we need to break through the current applications of it, which seem aimed more at stock prices and wow-factoring the public than at using these models for what they're best at.

The whole exciting part of these models was that they could convert unstructured inputs into natural language and structured outputs: translation tasks (in the broad sense of translation), extracting key data points from unstructured data, language tasks. They're outstanding for the NLP tasks we struggled with previously, and these tasks are highly transformative of any inputs; they rely purely on structural patterns. I think few people would argue that NLP tasks infringe on the copyright owner.

But I can at least see how moving in the direction (particularly with MoE approaches) of using Q&A data to support generating Q&A outputs, media data to support generating media outputs, and code data to support generating code moves toward the territory of affecting sales and using someone's IP to compete against them. From a technical perspective, I understand how LLMs are not really copying, but the way they are marketed and tuned seems to be more and more intended to use people's data to compete against them, which is dubious at best.

This process is akin to how humans learn by reading widely and absorbing styles and techniques, rather than memorizing and reproducing exact passages.

Many people quote this part saying that this is not the case and this is the main reason why the argument is not valid.

Let's take a step back and set aside the question of how current "AI" learns vs. how humans learn.

The key point for me here is that humans DO PAY (or at least are expected to...) to use and learn from copyrighted material. So if we're equating the "AI" method of learning with humans', both should be subject to the same rules and regulations. Meaning that "AI" should pay for using copyrighted material.

Even if you come to the conclusion that these models should be allowed to "learn" from copyrighted material, the issue is that they can and will reproduce copyrighted material.

They might not recreate a picture of Mickey Mouse that exists already, but they will draw a picture of Mickey Mouse. Just like I could, except I'm aware that I can't monetize it in any way. Well, new Mickey Mouse.

Those claiming AI training on copyrighted works is "theft" misunderstand key aspects of copyright law and AI technology. Copyright protects specific expressions of ideas, not the ideas themselves.

Sure.

When AI systems ingest copyrighted works, they're extracting general patterns and concepts - the "Bob Dylan-ness" or "Hemingway-ness" - not copying specific text or images.

Not really. Sure, they take input and garble it up and it is "transformative" - but so is a human watching a TV series on a pirate site, for example. Hell, that can be educational, and it is still treated as a copyright violation.

This process is akin to how humans learn by reading widely and absorbing styles and techniques, rather than memorizing and reproducing exact passages.

Perhaps. (Not an AI expert). But, as the law currently stands, only living and breathing persons can be educated, so the "educational" fair use protection doesn't stand.

The AI discards the original text, keeping only abstract representations in "vector space". When generating new content, the AI isn't recreating copyrighted works, but producing new expressions inspired by the concepts it's learned.

It does and it doesn't discard the original. It isn't impossible to recreate the original (since all the data it gobbled up gets stored somewhere in some shape or form and can be faithfully recreated, at least judging by a few comments below and news reports). So AI can and does recreate (duplicate or distribute, perhaps) copyrighted works.

Besides, for a copyright violation, "substantial similarity" is needed, not one-for-one reproduction.

This is fundamentally different from copying a book or song.

Again, not really.

It's more like the long-standing artistic tradition of being influenced by others' work.

Sure. Except when it isn't and the AI pumps out the original or something close enough to it.

The law has always recognized that ideas themselves can't be owned - only particular expressions of them.

I'd be careful with the "always" part. There was a famous case involving Katy Perry in which a short, simple musical phrase was the subject of a copyright infringement suit. The verdict against her was eventually overturned, but I do not doubt that some pretty wild cases have been upheld as copyright violations (see "patent troll").

Moreover, there's precedent for this kind of use being considered "transformative" and thus fair use. The Google Books project, which scanned millions of books to create a searchable index, was ruled legal despite protests from authors and publishers. AI training is arguably even more transformative.

The problem is that Google Books only lets you search for some phrase and have it pop up as being from source xy. It doesn't have the capability of reproducing the work (other than maybe the page the phrase was on) - well, it does have the capability, since the text is in the index somewhere, but there are checks in place to make sure it doesn't happen, which seem to be as yet unachieved in AI.

While it's understandable that creators feel uneasy about this new technology, labeling it "theft" is both legally and technically inaccurate.

Yes. Just as labeling piracy as theft is.

We may need new ways to support and compensate creators in the AI age, but that doesn't make the current use of copyrighted works for AI training illegal or unethical.

Yes, new legislation will be made to either let "Big AI" do as it pleases, or prevent it from doing so. Or, as usual, it'll be somewhere in between and vary from jurisdiction to jurisdiction.

However,

that doesn't make the current use of copyrighted works for AI training illegal or unethical.

this doesn't really stand. Sure, morals are debatable, and while I'd say it is more unethical than private piracy (so no distribution), since distribution and dissemination are involved, you do not seem to feel the same.

However, the law is clear. Private piracy (as in recording a song off of radio, a TV broadcast, screen recording a Netflix movie, etc.) is legal. As is digitizing books and lending the digital copy (as long as you have a physical copy that isn't lent out at the same time, representing the legal "original"). I think breaking DRM also isn't illegal (but someone please correct me if I'm wrong).

The problem arises when the pirated content is copied and distributed in an uncontrolled manner, which AI seems to be capable of. That makes the AI owner liable for piracy if the AI reproduces not even the same, but "substantially similar" output, just as much as hosts of "classic" pirated content distributed on the Web.

Obligatory IANAL and as far as the law goes, I focused on US law since the default country on here is the US. Similar or different laws are on the books in other places, although most are in fact substantially similar. Also, what the legislators come up with will definitely vary from place to place, even more so than copyright law since copyright law is partially harmonised (see Berne convention).

"labeling it "theft" is both legally and technically inaccurate." Well, my understanding is that humans have intelligence; humans teach and learn from previous/other people's work and make progress or create new work/ideas using their own intelligence. AI/machines don't have intelligence from the start and don't have their own intelligence to create/make things. They just copy, remix, and apply the knowledge, the many personalities, and all the expressions they have been taught. So "theft" is technically accurate.

The "you wouldn't download a car" statement was made against personal cases of piracy, and it got rightfully clowned on. It obviously doesn't work at all when you use its ridiculousness to defend big-ass corporations that try to profit from so much of the stuff they "downloaded".

Besides, it is not "theft". It is "plagiarism". And I'm glad to see that people who try to defend these plagiarism machines, which are being humanised and inflated into something they can never be, get clowned. It warms my heart.

Honestly, if this somehow results in regulators being like "fuck it, piracy is legal now" it won't negatively impact me in any way.

Corporations have abused copyright law for decades, they've ruined the internet, they've ruined media, they've ruined video games. I want them to lose more than anything else.

The shitty and likely situation is they'll be like "fuck it corporate piracy is legal but individuals doing it is still a crime".

I've been thinking since my early teens if not earlier that copyright is an outdated law in the digital age. If this dispute leads to more people realizing this, good.

Maybe if OpenAI hadn't suddenly decided not to be open when they got in bed with Micro$oft, they could just make it a community effort. I own a copyrighted work that the AI hasn't been fed yet, so I loan it for training and you do the same. They could have made it an open initiative. Missed opportunity from a greedy company. Push the boundaries of technology, and we can all reap the rewards.

There is an easy answer to this, but it's not being pursued by AI companies because it'll make them less money, albeit totally ethically.

Make all LLM models free to use, regardless of sophistication, and be collaborative with sharing the algorithms. They don't have to be open to everyone, but they can look at requests and grant them on merit without charging for it.

So how do they make money? How does Google search make money? Advertisements. If you have a good, free product, advertisement space will follow. If it's impossible to make an AI product while also properly compensating people for training material, then don't make it a sold product. Use copyrighted training material freely to offer a free product with no premiums.

This take is correct although I would make one addition. It is true that copyright violation doesn’t happen when copyrighted material is inputted or when models are trained. While the outputs of these models are not necessarily copyright violations, it is possible for them to violate copyright. The same standards for violation that apply to humans should apply to these models.

I entirely reject the claims that there should be one standard for humans and another for these models. Every time this debate pops up, people claim some distinction based on ‘intelligence’ or ‘conscience’ or ‘understanding’ or ‘awareness’. This is a meaningless argument because we have no clear understanding of what those things are. I’m not claiming anything about the nature of these models. I’m just pointing out that people love to apply an undefined standard to them.

We should apply the same copyright standards to people, models, corporations, and old-school algorithms.

The argument seen most commonly from people on the fediverse (which I happen to agree with) is really not about what current copyright laws and treaties say or how they should be interpreted, but about how people think things should be (even if it requires changing laws to make it that way).

And it fundamentally comes down to economics - the study of how resources should be distributed. Apart from oligarchs and the wannabe oligarchs who serve as useful idiots for the real oligarchs, pretty much everyone wants a relatively fair and equal distribution of wealth amongst the people (differing between left and right in opinion on exactly how equal things should be, but there is still some common ground). Hardly anyone really wants serfdom or similar where all the wealth and power is concentrated in the hands of a few (obviously it's a spectrum of how concentrated, but very few people want the extreme position to the right).

Depending on how things go, AI technologies have the power to serve humanity and lift everyone up equally if they are widely distributed, removing barriers and breaking existing 'moats' that let a few oligarchs hoard a lot of resources. Or it could go the other way - oligarchs are the only ones that have access to the state of the art model weights, and use this to undercut whatever they want in the economy until they own everything and everyone else rents everything from them on their terms.

The first scenario is a utopia scenario, and the second is a dystopia, and the way AI is regulated is the fork in the road between the two. So of course people are going to want to cheer for regulation that steers towards the utopia.

That means things like:

- Fighting back when the oligarchs try to talk about 'AI Safety' meaning that there should be no Open Source models, and that they should tightly control how and for what the models can be used. The biggest AI Safety issue is that we end up in a dystopian AI-fueled serfdom, and FLOSS models and freedom for the common people to use them actually help to reduce the chances of this outcome.

- Not allowing 'AI washing' where oligarchs can take humanity's collective work, put it through an algorithm, and produce a competing thing that they control - unless everyone has equal access to it. One policy that would work for this would be that if you create a model based on other people's work, and want to use that model for a commercial purpose, then you must publicly release the model and model weights. That would be a fair trade-off for letting them use that information for training purposes.

Fundamentally, all of this is just exacerbating cracks in the copyright system as a policy. I personally think that a better system would look like this:

- Everyone gets a Universal Basic Income paid, and every organisation and individual making profit pays taxes in to fund the UBI (in proportion to their profits).

- All forms of intellectual property rights (except trademarks) are abolished - copyright, patents, and trade secrets are no longer enforced by the law. The UBI replaces it as compensation to creators.

- It is illegal to discriminate against someone for publicly disclosing a work they have access to, as long as they didn't accept valuable consideration to make that disclosure. So for example, if an OpenAI employee publicly released the model weights for one of OpenAI's models without permission from anyone, it would be illegal for OpenAI to demote / fire / refuse to promote / pay them differently on that basis, and for any other company to factor that into their hiring decision. There would be exceptions for personally identifiable information (e.g. you can't release the client list or photos of real people without consequences), and disclosure would have to be public (i.e. not just to a competitor, it has to be to everyone) and uncompensated (i.e. you can't take money from a competitor to release particular information).

If we had that policy, I'd be okay for AI companies to be slurping up everything and training model weights.

However, with the current policies, it is pushing us towards the dystopic path where AI companies take what they want and never give anything back.

You should look at the energy cost of AI. It's not a miracle machine.